Calculating word frequency using RapidMiner

bhupendra_patil

Employee-RapidMiner, Member Posts: 168

This article walks through a sample process for finding word frequencies in unstructured text.

The basic operators you need to build a process like this are:

- A data source (this example uses Twitter; see the article on using Twitter for details).

- "Nominal to Text". This changes the data type so that the Process Documents operator can work on it. Please note that the text mining extension only processes columns of the "Text" data type.

- One of the "Process Documents..." operators, depending on what your data source is.

- Tokenize (splits each document into a sequence of tokens).

Please see the "basic word frequency.rmp" file attached to this article for a working example.

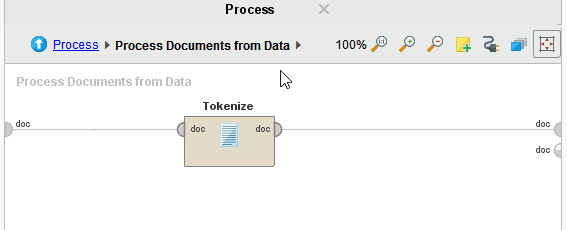

Your process would look like

Inside the Process Documents from Data will look like

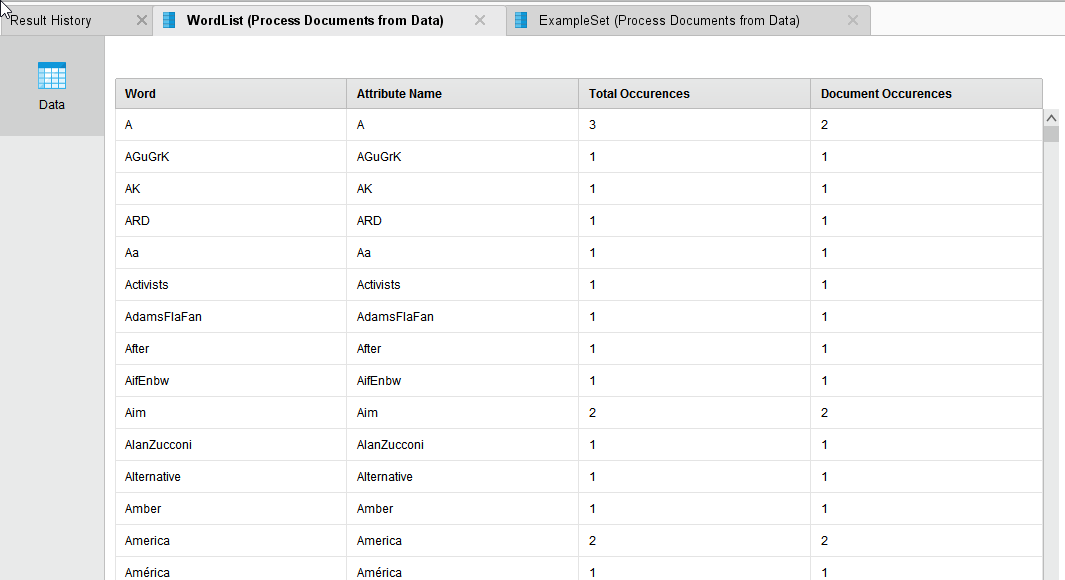

The output will look something like this. (Please note that your words may differ for the exact same process, since it pulls live Twitter data.) The word frequency list, or WordList output, is delivered via the "wor" port of the "Process Documents from Data" operator.

Total Occurences - Tells you how many times the word appeared across all the examples.

Document Occurences - Tells you the number of individual documents the word appeared in.

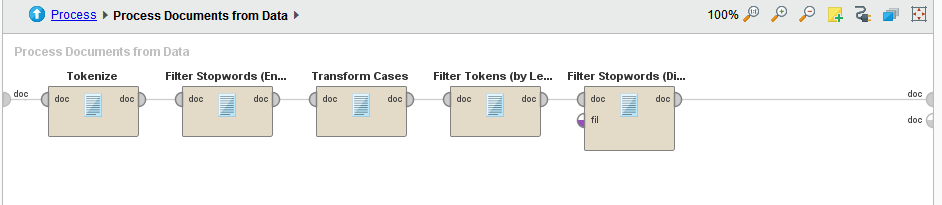
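
To make the difference between the two columns concrete, here is a minimal Python sketch of the same counting outside RapidMiner. The function names (`tokenize`, `word_list`) and the sample documents are hypothetical, and splitting on non-letter characters is an assumption about how the tokenizer is configured; this is not how RapidMiner implements it internally.

```python
import re
from collections import Counter

def tokenize(text):
    # Split on anything that is not a letter (assumed tokenizer behavior).
    return [tok for tok in re.split(r"[^A-Za-z]+", text) if tok]

def word_list(documents):
    total = Counter()    # total occurrences across all documents
    in_docs = Counter()  # number of documents that contain the word
    for doc in documents:
        tokens = tokenize(doc)
        total.update(tokens)
        in_docs.update(set(tokens))  # count each word once per document
    return {word: (total[word], in_docs[word]) for word in total}

docs = ["big data big ideas", "data mining", "Big plans"]
for word, (total_occ, doc_occ) in sorted(word_list(docs).items()):
    print(word, total_occ, doc_occ)
```

Note that "big" and "Big" are counted as separate words here, which is the case-sensitivity problem described in the next paragraph.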

As you will notice in the output, there are several unwanted words: the same word treated as two different words because of a difference in case, common English words that you do not care about, and specific words that you may not be interested in. All of these cases can be handled by enriching the steps taken in "Process Documents from Data". Your improved "Process Documents from Data" subprocess may look something like the one below.

Here are the reasons for using these operators:

- Filter Stopwords (English): Removes common English words such as "a", "and", and "then".

- Transform Cases: Converts everything to one case, i.e. lower or upper.

- Filter Tokens (by Length): Removes words that are shorter or longer than the configured numbers of characters.

- Filter Stopwords (Dictionary): Lets you drop specific words. The list can be provided as a simple text file with each word to ignore on its own line. See the attached sample file.
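
The effect of these four steps can be sketched in plain Python. This is only an illustration under stated assumptions: the stopword set is a tiny made-up sample rather than RapidMiner's English list, the length limits are arbitrary, lowercasing is applied first so that the stopword check is case-insensitive, and `clean_tokens` and `load_dictionary` are hypothetical names.

```python
import re

# Tiny illustrative stopword set; the real English list is much longer.
STOPWORDS_EN = {"a", "and", "then", "the", "is", "of"}

def load_dictionary(text):
    # One word to ignore per line, as in the dictionary file described above.
    return {line.strip().lower() for line in text.splitlines() if line.strip()}

def clean_tokens(text, min_chars=3, max_chars=25, custom_stopwords=frozenset()):
    tokens = [t for t in re.split(r"[^A-Za-z]+", text) if t]
    tokens = [t.lower() for t in tokens]                              # transform cases
    tokens = [t for t in tokens if t not in STOPWORDS_EN]             # English stopwords
    tokens = [t for t in tokens if min_chars <= len(t) <= max_chars]  # length filter
    tokens = [t for t in tokens if t not in custom_stopwords]         # dictionary stopwords
    return tokens

custom = load_dictionary("https\nrt\n")
print(clean_tokens("The Game and the game is ON then https", custom_stopwords=custom))
```

After cleaning, "Game" and "game" collapse into one word, and the short, common, and dictionary-listed tokens are gone.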

Comments

This method has been very helpful. Can you please advise how to filter by the total number of occurrences?

For example: I want to eliminate words and phrases that occur only once in the document (or twice, or ten times), so that I only get high-frequency words in my list. This would assist greatly in producing a more manageable list of examples.

Thanks!

Dear Jason,

This is called pruning. If you look at the options of the Process Documents operator, you can see some ways to do it.
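
As a rough illustration of the idea (not of the operator's exact pruning options), a count-based filter over a word list could be sketched in Python like this. The function name `prune_by_count` and the `min_count` threshold are hypothetical.

```python
from collections import Counter

def prune_by_count(occurrences, min_count=2):
    # Keep only words that occur at least min_count times.
    return {word: n for word, n in occurrences.items() if n >= min_count}

counts = Counter("data mining data text data mining once".split())
print(prune_by_count(counts, min_count=2))
```

The same filter works on document counts instead of total counts if you pass in a mapping of word to number of documents.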

Best,

Martin

Dortmund, Germany

Very helpful and well explained.

I wonder whether I can also obtain multi-word occurrences. That is, if the word "Super" is always followed by "Bowl", I would also like my list to include the occurrences of the term "Super Bowl". The same goes for other common expressions that are repeated in my data, such as "nice job" or "well done".

Thanks in advance.

Dear Jesus,

It's called an n-gram. If you add an n-gram operator after Transform Cases, you will get exactly these combinations as well. Combinations of length two are called 2-grams, and their words are separated by a _.
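
To show what that produces, here is a minimal Python sketch that appends 2-grams to a token list, joined with an underscore. The function name `add_bigrams` is hypothetical, and the sketch only illustrates the output format, not the operator itself.

```python
def add_bigrams(tokens):
    # Pair each token with its successor and join the pair with "_".
    bigrams = [f"{first}_{second}" for first, second in zip(tokens, tokens[1:])]
    return tokens + bigrams

print(add_bigrams(["super", "bowl", "party"]))
```

Counting the resulting tokens then gives frequencies for terms such as "super_bowl" alongside the single words.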

Best,

Martin

Dortmund, Germany

Hi,

I am currently using the free version of RapidMiner Studio for a research project and am attempting to replicate this procedure. My problem is that when I search for the "Process Documents from Data" operator, there are no results. I was wondering whether I need to update RapidMiner or purchase this specific operator. Please let me know ASAP.

Best,

Bernardo