Feature Selection Part 1: Feature Weighting

MartinLiebig

Administrator, Moderator, Employee-RapidMiner, RapidMiner Certified Analyst, RapidMiner Certified Expert, University Professor Posts: 3,533

In some use cases you may be interested in figuring out which attributes are important for predicting a given label. This attribute importance can be a result in itself, because it can tell you why someone or something behaves the way it does. In this article we will discuss common techniques for finding these feature weights.

Filter Methods

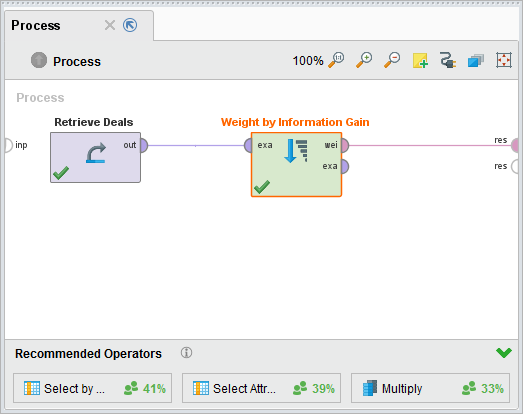

One of the most common ways to determine attribute importance is to use a statistical measure. Frequently used measures are correlation, Gini index and information gain. In RapidMiner you can calculate these values with the Weight by operators (for example Weight by Correlation or Weight by Information Gain).
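To make the filter idea concrete, here is a minimal pure-Python sketch of two of these measures: absolute Pearson correlation for a numeric attribute and information gain for a nominal one. It is not RapidMiner's implementation; the function names (`pearson_weight`, `information_gain`, `weight_by`) are hypothetical, and the weight vector is modeled as a plain dict mapping attribute names to scores.

```python
import math
from collections import Counter


def pearson_weight(xs, ys):
    """Absolute Pearson correlation between a numeric attribute and a numeric label."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no information about the label
    return abs(cov / (sx * sy))


def entropy(labels):
    """Shannon entropy (in bits) of a list of nominal values."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels):
    """Label entropy minus the weighted label entropy after splitting on a nominal attribute."""
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    remainder = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


def weight_by(measure, data, label):
    """Build a weight vector: attribute name -> score under the given measure."""
    return {name: measure(column, label) for name, column in data.items()}


# Usage: "outlook" separates the label perfectly, "windy" not at all.
data = {"outlook": ["sunny", "sunny", "rain", "rain"], "windy": ["yes", "no", "yes", "no"]}
play = ["no", "no", "yes", "yes"]
print(weight_by(information_gain, data, play))  # {'outlook': 1.0, 'windy': 0.0}
```

Each measure only looks at one attribute and the label at a time, which is what makes filter methods fast, and also what causes the limitations discussed below.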

The resulting object is a weight vector, the central object for all feature weighting operations. If we have a look at it, it looks like this:

There are two operators that are important when working with weight objects. Weights to Data converts this table into an example set, which can then be exported to Excel or a database.

Select by Weights allows you to select attributes using these weights. For example, you can keep only attributes with weights higher than 0.1, or keep the top k attributes.
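Both selection modes can be sketched on a weight vector represented as a plain dict. The function and its `threshold`/`top_k` parameters are hypothetical illustrations, not the actual parameters of the Select by Weights operator.

```python
def select_by_weights(weights, threshold=None, top_k=None):
    """Return the names of the attributes to keep.

    threshold: keep every attribute whose weight is strictly greater than this value.
    top_k:     otherwise keep the k attributes with the highest weights.
    """
    if threshold is not None:
        return [name for name, w in weights.items() if w > threshold]
    if top_k is not None:
        return sorted(weights, key=weights.get, reverse=True)[:top_k]
    return list(weights)  # no criterion given: keep everything


weights = {"age": 0.42, "income": 0.08, "tenure": 0.27, "zip": 0.01}
print(select_by_weights(weights, threshold=0.1))  # ['age', 'tenure']
print(select_by_weights(weights, top_k=1))        # ['age']
```

A fixed threshold depends on the scale of the measure, while top k does not, which is one reason to prefer top k when comparing different weighting methods.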

Including Non Linear Attributes and Combinations

The filter methods above do not capture non-linear relationships. One technique to overcome this is to generate non-linear transformations of the attributes. The operator Generate Function Set can generate terms like pow(Age,2) or sqrt(Age) and combinations of these. This operator is usually combined with Rename by Construction to get readable names.
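The following sketch mimics the idea in plain Python: it keeps the original columns and adds squares, square roots (only for columns without negative values) and pairwise products, naming each new column after its construction, similar in spirit to Rename by Construction. The function name and the chosen set of functions are assumptions for illustration; the real operator's function list and naming may differ.

```python
import math


def generate_function_set(data):
    """data: attribute name -> list of numeric values.

    Returns a new dict with the original columns plus pow(x,2), sqrt(x)
    (only for columns without negative values) and pairwise products a*b.
    """
    out = dict(data)
    names = list(data)
    for a in names:
        col = data[a]
        out[f"pow({a},2)"] = [v ** 2 for v in col]
        if all(v >= 0 for v in col):
            out[f"sqrt({a})"] = [math.sqrt(v) for v in col]
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            out[f"{a}*{b}"] = [x * y for x, y in zip(data[a], data[b])]
    return out


features = generate_function_set({"Age": [4, 9, 16], "Income": [1, -2, 3]})
print(features["sqrt(Age)"])        # [2.0, 3.0, 4.0]
print("sqrt(Income)" in features)   # False, Income contains a negative value
```

The generated columns can then be scored with any of the filter measures, so a non-linear relationship with Age gets a chance to show up as a weight on one of its transformations.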

Handling Dependencies

Another known issue with filter methods is dependencies between variables. If your data set contains Age, 2xAge, 3xAge and 4xAge, all of them might get a high feature weight, although they carry the same information. A technique that overcomes this issue is MRMR (Minimum Redundancy Maximum Relevance). MRMR is included in RapidMiner's Feature Selection Extension.
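A greedy MRMR selection can be sketched as follows. The classic formulation measures relevance and redundancy with mutual information; this sketch swaps in absolute Pearson correlation to stay short and uses the "relevance minus mean redundancy" criterion. It is not the Feature Selection Extension's implementation, and all names are hypothetical.

```python
import math


def abs_corr(xs, ys):
    """Absolute Pearson correlation; 0.0 if either column is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return 0.0 if sx == 0 or sy == 0 else abs(cov / (sx * sy))


def mrmr(data, label, k):
    """Greedily pick k attributes that are relevant to the label but not
    redundant with the attributes already picked."""
    relevance = {a: abs_corr(col, label) for a, col in data.items()}
    selected = []
    while len(selected) < min(k, len(data)):
        best, best_score = None, -math.inf
        for a in sorted(data):
            if a in selected:
                continue
            # mean correlation with what we already have (0 for the first pick)
            redundancy = (sum(abs_corr(data[a], data[s]) for s in selected) / len(selected)
                          if selected else 0.0)
            score = relevance[a] - redundancy
            if score > best_score:
                best, best_score = a, score
        selected.append(best)
    return selected


age = [1, 2, 3, 4, 5]
data = {"Age": age, "2xAge": [2 * v for v in age], "Other": [1, 0, 1, 0, 1]}
label = [a + 2 * o for a, o in zip(age, data["Other"])]
print(mrmr(data, label, 2))
```

A plain ranking by relevance would return Age and 2xAge here; the redundancy term makes MRMR pick one of the two plus Other instead.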

Model Based Feature Weights

Another way to get feature weights is to use a model. Some models can provide a weight vector themselves. These values tell you how important an attribute was for the learner itself. The concrete calculation scheme differs between learners. Weight vectors are provided by these operators:

- Linear Regression

- Generalized Linear Model

- Gradient Boosted Tree

- Support Vector Machine (only with linear kernel)

- Logistic Regression

- Logistic Regression (SVM)

It is generally advisable to tune the parameters (and the choice) of these operators for maximum prediction accuracy before extracting the weights.
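As an illustration of model-based weights, the sketch below fits an ordinary least-squares linear regression on standardized attributes and uses the absolute coefficients as weights. Standardizing first is an assumption made here so that weights are comparable across attributes with different scales; RapidMiner's learners compute their weights with their own, learner-specific schemes. All function names are hypothetical.

```python
def standardize(col):
    """Scale a column to zero mean and unit (population) standard deviation.
    Assumes the column is not constant."""
    n = len(col)
    mean = sum(col) / n
    std = (sum((v - mean) ** 2 for v in col) / n) ** 0.5
    return [(v - mean) / std for v in col]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[pivot] = m[pivot], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def linear_regression_weights(data, label):
    """Fit OLS on standardized attributes and return |coefficient| per attribute."""
    names = list(data)
    cols = [standardize(data[a]) for a in names]
    y_mean = sum(label) / len(label)
    y = [v - y_mean for v in label]  # centered label, so no intercept term is needed
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(p * q for p, q in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(p * q for p, q in zip(ci, y)) for ci in cols]
    beta = solve(xtx, xty)
    return {a: abs(b) for a, b in zip(names, beta)}


x1 = [1, 2, 3, 4, 5]
x2 = [1, 0, 1, 0, 1]
label = [2 * a + b for a, b in zip(x1, x2)]
print(linear_regression_weights({"x1": x1, "x2": x2}, label))
```

Here x1 gets the larger weight (about 2.83 versus 0.49): its coefficient is larger and it also varies more, and standardization folds both effects into one comparable number.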

A special case is the Random Forest operator. A Random Forest model can be fed into the Weight by Tree Importance operator to get feature weights.

Feature Selection Methods

Besides feature weighting, you can also use feature selection techniques. The difference is that you only get a weight vector with 1 if an attribute is in the set of chosen attributes and 0 if it is not. The most common techniques for this are wrapper methods, namely Forward Selection and Backward Elimination.
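A wrapper method treats the learner as a black box that scores attribute subsets. The sketch below shows greedy forward selection returning such a 0/1 weight vector. The `evaluate` callable is a hypothetical stand-in for "train a model on this subset and return a validated performance"; the toy scoring function is made up for illustration and does not reproduce RapidMiner's Forward Selection operator.

```python
def forward_selection(attributes, evaluate, min_gain=0.0):
    """Greedy wrapper: start from the empty set and repeatedly add the attribute that
    improves evaluate(subset) the most; stop when no addition gains more than min_gain.

    evaluate(subset) stands in for training and validating a model on `subset`
    (e.g. cross-validated accuracy); higher is better and it must accept [].
    Returns a 0/1 weight vector like the one produced by feature selection.
    """
    selected = []
    best_score = evaluate(selected)
    remaining = list(attributes)
    while remaining:
        score, attr = max((evaluate(selected + [a]), a) for a in remaining)
        if score - best_score <= min_gain:
            break
        selected.append(attr)
        remaining.remove(attr)
        best_score = score
    return {a: 1 if a in selected else 0 for a in attributes}


# Hypothetical stand-in for a validated performance: each useful attribute adds
# a fixed amount, and every attribute costs a small complexity penalty.
useful = {"age": 0.3, "income": 0.2}


def toy_evaluate(subset):
    return sum(useful.get(a, 0.0) for a in subset) - 0.01 * len(subset)


print(forward_selection(["age", "income", "zip"], toy_evaluate))
# {'age': 1, 'income': 1, 'zip': 0}
```

Backward Elimination is the mirror image: start with all attributes and repeatedly remove the one whose removal hurts performance least, stopping when every removal hurts too much.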

Polynominal Classification Problems and Clustering

In polynominal classification problems it is often useful to do this in a one-vs-all fashion. This answers the question "what makes group A different from all the others?" A variation of this method is to apply it to cluster labels to get cluster descriptions.
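The one-vs-all idea can be sketched by relabeling the data once per class ("this class" vs. "all others") and computing an ordinary weight vector for each binary problem. The sketch uses information gain as the measure (an arbitrary choice; any filter measure works the same way), and the function names are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of nominal values."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels):
    """Label entropy minus the weighted label entropy after splitting on a nominal attribute."""
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    remainder = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


def one_vs_all_weights(data, labels):
    """For every class, relabel as True (this class) vs. False (all others)
    and compute one weight vector per class."""
    result = {}
    for cls in sorted(set(labels)):
        binary = [y == cls for y in labels]
        result[cls] = {a: information_gain(col, binary) for a, col in data.items()}
    return result


data = {"color": ["red", "red", "blue", "blue"], "size": ["s", "l", "s", "s"]}
groups = ["A", "A", "B", "C"]  # these could just as well be cluster labels
weights = one_vs_all_weights(data, groups)
print(weights["A"])  # color separates A from the rest perfectly
```

Using booleans for the binary label avoids clashes with an existing class that happens to be called "rest" or "other".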

Evolutionary Feature Generation

Another sophisticated approach to incorporating non-linearities and interaction terms (e.g. finding a dependency like sqrt(age-weight)) is evolutionary feature generation. The corresponding operators in RapidMiner are Yagga and Yagga2. Please have a look at the operator info for more details.

Prescriptive Analytics

Instead of generating a global feature weight, you can also find individual dependencies for a single example. In this case you vary the attribute values of one example and check how the prediction of your model changes. A common use case is checking whether it is worth calling a customer by comparing their individual scoring result with and without a call.
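The what-if idea can be sketched with any trained model treated as a scoring function. Below, `toy_model` is a hypothetical logistic-style score with made-up coefficients, standing in for a real model; `what_if` scores copies of one customer that differ only in the varied attribute.

```python
import math


def what_if(model, example, attribute, values):
    """Score copies of a single example in which `attribute` is set to each candidate value."""
    return {v: model({**example, attribute: v}) for v in values}


# Hypothetical scoring model: probability that a customer buys.
# The coefficients are made up purely for illustration.
def toy_model(customer):
    z = -1.0 + 0.02 * customer["tenure"] + (0.8 if customer["called"] else 0.0)
    return 1 / (1 + math.exp(-z))


customer = {"tenure": 25, "called": False}
scores = what_if(toy_model, customer, "called", [False, True])
uplift = scores[True] - scores[False]
print(f"uplift from calling: {uplift:.3f}")  # uplift from calling: 0.197
```

The original example is left unchanged because each variant is a copy. A call would then be worth making if the uplift, multiplied by the value of a purchase, exceeds the cost of the call.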

Resources

- Paper on Feature Selection Extension - http://www-ai.cs.uni-dortmund.de/PublicPublicationFiles/schowe_2011a.pdf

Dortmund, Germany

Comments

Great article @mschmitz! A question that has come up several times in my training classes is whether there is any operator to provide attribute importance for a multivariate scoring model. As you mention above, some modeling algorithms output weights directly, but many popular ones do not (e.g. decision tree, neural net, naive bayes). In those cases, I usually tell the students that they can take one of the other approaches discussed in this article (such as the "weight by" operators discussed in the first section, which speak to independent weight but don't address weight in the context of a specific model).

In theory, another approach is to take the list of all model attributes and remove them one at a time from the final model to see the resulting deterioration in model performance, and rank them accordingly (where the attribute that leads to the greatest decrease in performance has the highest weight, and all other attributes' weights are scaled to that). This can of course be done manually (and even done with loops to cut down on repetitive operations), but it would be nice if RapidMiner added an operator to do this automatically for any model and output the resulting table as a set of weights. Just an idea for a future product enhancement!

Lindon Ventures

Data Science Consulting from Certified RapidMiner Experts
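The "remove one attribute at a time" idea from the comment above can be sketched in plain Python. `train_and_score` is a hypothetical callable that retrains the model on the given subset and returns a validated performance; the scaling follows the comment (largest loss becomes the top weight). This is an illustration, not an existing RapidMiner operator.

```python
def n_minus_1_importance(attributes, train_and_score):
    """'n-1' (drop-column) importance.

    train_and_score(subset) must train the model on `subset` only and return a
    validated performance (higher is better). Each attribute's weight is the
    performance lost when it is left out, scaled so the largest loss becomes 1.0.
    """
    baseline = train_and_score(list(attributes))
    loss = {}
    for a in attributes:
        without_a = [b for b in attributes if b != a]
        loss[a] = baseline - train_and_score(without_a)
    top = max(loss.values())
    if top <= 0:  # no attribute helps; avoid dividing by zero or flipping signs
        return {a: 0.0 for a in attributes}
    return {a: drop / top for a, drop in loss.items()}


# Hypothetical stand-in for "train on the subset, validate, return accuracy".
contribution = {"age": 0.3, "income": 0.1, "zip": 0.0}


def toy_train_and_score(subset):
    return 0.5 + sum(contribution[a] for a in subset)


print(n_minus_1_importance(["age", "income", "zip"], toy_train_and_score))
```

With n attributes this needs n + 1 full training runs, so it gets expensive for wide data sets. An attribute can also get a negative weight if leaving it out actually improves performance.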

Thanks @Telcontar120,

I agree that there are some more methods which will be added to this article over time. Your proposal is totally fine. I often call them n-1 models. The whole behaviour is pretty similar to Backward Elimination.

As another point, Breiman et al. propose not deleting attributes, but only "noising" one attribute during testing by shuffling its values. I think this technique has some nice advantages over removing attributes (e.g. better handling of collinearities). I have a process for this somewhere... I need to search for it.

~Martin
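The shuffling ("noising") approach mentioned in the comment above can be sketched as follows. Unlike the n-1 approach, the trained model is kept unchanged and only the test data is perturbed. The model, data and `accuracy` scorer are hypothetical placeholders for illustration, not a RapidMiner process.

```python
import random


def permutation_importance(model, rows, labels, attributes, score, repeats=5, seed=0):
    """Shuffle ("noise") one attribute's values in the test data, keep the
    trained model unchanged, and measure the average drop in performance."""
    rng = random.Random(seed)
    baseline = score(labels, [model(r) for r in rows])
    importance = {}
    for a in attributes:
        drops = []
        for _ in range(repeats):
            shuffled = [r[a] for r in rows]
            rng.shuffle(shuffled)
            noised = [{**r, a: v} for r, v in zip(rows, shuffled)]
            drops.append(baseline - score(labels, [model(r) for r in noised]))
        importance[a] = sum(drops) / repeats  # average over several shuffles
    return importance


def accuracy(truth, predicted):
    return sum(t == p for t, p in zip(truth, predicted)) / len(truth)


# Hypothetical already-trained model that only looks at income.
def model(row):
    return "yes" if row["income"] > 50 else "no"


rows = [{"age": a, "income": i} for a, i in
        [(23, 20), (35, 80), (41, 30), (52, 90), (29, 60), (61, 10), (47, 70), (33, 40)]]
labels = [model(r) for r in rows]
print(permutation_importance(model, rows, labels, ["age", "income"], accuracy))
```

Because the model ignores age, shuffling age never changes a prediction and its importance is exactly 0.0, while shuffling income breaks the predictions and yields a positive importance.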

Thanks @mschmitz, and of course I realize that there really isn't a simple, singular way of answering the question "what is the variable importance in my model?" So multiple ways of answering that question may be appropriate. Nevertheless it is a very common question in my experience, so it would be nice if RapidMiner had a convenient and easy-to-explain approach to offer its users. As you call it, the "n-1" approach is quite similar to Backwards Elimination, and in fact, if the intermediate output from the existing Backward Elimination operator could be made available, then that might be a very easy way for RapidMiner to create an operator that would provide one perspective on that question. And of course if you find a relevant process and post it here, I would be quite grateful :-)

Cheers,

Brian

Hi Martin,

Regarding this part:

"The operator Generate Function Set can be used to generate things like pow(Age,2) or sqrt(Age) and combination between these."

Do I understand correctly that if we use this method to overcome non-linearity, we should also discard the original attributes after the transformation (uncheck 'Keep all')? Maybe you have an example of the whole process?

Thanks!

Vladimir

http://whatthefraud.wtf

Hi,

Please help me.

Moritz

I would like to know whether these methods are implemented to run in parallel.

Especially because some of these methods do not scale well with increasing data sizes.