
Decision Tree Validation

Contributor I

Hello experts!

I'm a newbie in RapidMiner. Can anyone help me reproduce the following diagram with RapidMiner?

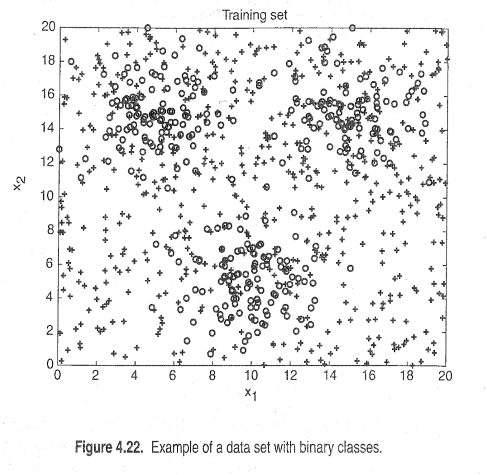

I generated 3000 data points with Python. My data points belong to two classes: class one is drawn from a mixture of three Gaussian distributions and class two from a uniform distribution, with 1200 data points in class one and 1800 in class two. 30% of the data points are used for training, and the remaining 70% are used for testing. I used a decision tree classifier.
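To make the setup concrete, a minimal Python sketch of data like this, using only the standard library, could look as follows. The three mixture means, the shared standard deviation of 0.8, the uniform box [-4, 4] x [-4, 4], and the function names `make_dataset` and `split` are all assumptions for illustration, not the actual values or code used above.

```python
import random

def make_dataset(seed=0):
    """Hypothetical re-creation of the data: 1200 class-1 points from a mixture
    of three 2-D Gaussians and 1800 class-2 points from a uniform box.
    Means, spread and box bounds are assumed values."""
    rng = random.Random(seed)
    centers = [(-2.0, -2.0), (0.0, 2.0), (2.0, -1.0)]  # assumed mixture means
    sigma = 0.8                                          # assumed shared std. dev.
    data = []
    for _ in range(1200):
        cx, cy = rng.choice(centers)  # pick a mixture component uniformly
        data.append(((rng.gauss(cx, sigma), rng.gauss(cy, sigma)), 1))
    for _ in range(1800):
        data.append(((rng.uniform(-4, 4), rng.uniform(-4, 4)), 2))
    return data

def split(data, train_fraction=0.3, seed=0):
    """Shuffle a copy of the rows, keep 30% for training and 70% for testing."""
    rows = data[:]
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]

train, test = split(make_dataset())
print(len(train), len(test))  # 900 2100
```

Splitting off only 30% for training is unusual (70/30 the other way round is more common), but it is kept here to match the description.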

I'm sorry if this is not the right place to post this question, but as I said, I'm new here.

Thanks for the attention!

Answers

You would use an "Optimize Parameters" operator that iterates over the number of tree nodes, then use the "Log" operator to record the performance measure and the number of nodes for each iteration. In theory, if you do this with a "Split Data" operator and measure performance on the same data the model was trained on (no cross-validation), you will see that the error rate only decreases as you add more nodes. Then run it again, measuring performance on held-out test data inside a cross-validation, and you will see that the error decreases at first as nodes are added but then increases at some point, once the trees become complex enough to overfit. The specific results will depend on your data and won't look exactly like the attached graphic, but the same general pattern should hold.
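Outside RapidMiner, the same experiment (sweep the tree complexity, log training and test error for each setting) can be sketched in plain Python. This is a hypothetical stand-in, not what the Optimize Parameters and Log operators do internally: it grows a small Gini-based tree, uses maximum depth as the complexity knob instead of the node count, and reuses assumed data parameters (blob centres, spread, box bounds). All function names are made up for the example.

```python
import random
from collections import Counter

def make_data(rng):
    # Assumed stand-in data: 1200 class-1 points from three Gaussian blobs
    # (centres and spread are guesses) and 1800 uniform class-2 points.
    centers = [(-2.0, -2.0), (0.0, 2.0), (2.0, -1.0)]
    rows = []
    for _ in range(1200):
        cx, cy = rng.choice(centers)
        rows.append(((rng.gauss(cx, 0.8), rng.gauss(cy, 0.8)), 1))
    for _ in range(1800):
        rows.append(((rng.uniform(-4, 4), rng.uniform(-4, 4)), 2))
    rng.shuffle(rows)
    return rows

def gini(counts, n):
    # Gini impurity of a node with the given class counts and size n.
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def best_split(rows):
    # Scan each feature in sorted order and keep the threshold with the lowest
    # size-weighted Gini impurity; return None if nothing beats the parent.
    n = len(rows)
    total = Counter(y for _, y in rows)
    parent = gini(total, n)
    best = None  # (weighted impurity, feature index, threshold)
    for f in range(len(rows[0][0])):
        ordered = sorted(rows, key=lambda r: r[0][f])
        left = Counter()
        for i in range(n - 1):
            left[ordered[i][1]] += 1
            a, b = ordered[i][0][f], ordered[i + 1][0][f]
            if a == b:
                continue  # no threshold separates equal values
            nl, nr = i + 1, n - i - 1
            right = {c: total[c] - left[c] for c in total}
            score = (nl * gini(left, nl) + nr * gini(right, nr)) / n
            if score < parent - 1e-12 and (best is None or score < best[0]):
                best = (score, f, a)  # rule: x[f] <= a goes left
    return best

def build(rows, depth, max_depth):
    # Grow the tree greedily; a leaf predicts its majority class.
    labels = [y for _, y in rows]
    majority = Counter(labels).most_common(1)[0][0]
    split = None
    if depth < max_depth and len(set(labels)) > 1:
        split = best_split(rows)
    if split is None:
        return ("leaf", majority)
    _, f, t = split
    left = [r for r in rows if r[0][f] <= t]
    right = [r for r in rows if r[0][f] > t]
    return ("node", f, t, build(left, depth + 1, max_depth),
            build(right, depth + 1, max_depth))

def predict(tree, x):
    # Walk from the root to a leaf and return the leaf's class.
    while tree[0] == "node":
        _, f, t, left, right = tree
        tree = left if x[f] <= t else right
    return tree[1]

def error_rate(tree, rows):
    return sum(predict(tree, x) != y for x, y in rows) / len(rows)

data = make_data(random.Random(42))
cut = int(0.3 * len(data))  # 30% training, 70% testing, as in the question
train, test = data[:cut], data[cut:]

# Analogue of the "Optimize Parameters + Log" loop: one row per setting.
results = []
for max_depth in range(1, 13):
    tree = build(train, 0, max_depth)
    results.append((max_depth, error_rate(tree, train), error_rate(tree, test)))
    print(f"depth={max_depth:2d}  train_err={results[-1][1]:.3f}  "
          f"test_err={results[-1][2]:.3f}")
```

Because each split is chosen the same way regardless of the depth limit, every deeper tree extends the shallower one, so the training-error column can never go up. The test-error column is where the turn caused by overfitting, if any, should appear; how pronounced it is depends on the data.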

Lindon Ventures

Data Science Consulting from Certified RapidMiner Experts

Where is my process wrong?

If you can post the process itself rather than screenshots, it is easier to troubleshoot. Go to the File menu and choose "export process"; it will output a small file called something.rmp that contains the full process.

I don't have access to your data, so I modified the process to use the sample Titanic dataset. This dataset is too small to show much difference in tree performance, but the process is now set up correctly. On a larger dataset you should see more divergence between training and testing error, as in the original graphic: the training error only goes down, while the testing error goes down at first and then goes back up once overfitting occurs.
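In the setup suggested in this thread, the test error for each tree size is estimated inside a cross-validation rather than on one fixed split. A minimal Python sketch of k-fold cross-validation follows, assuming a model is just a pair of fit/predict functions. The names `k_fold_indices` and `cross_val_error` are hypothetical, and the majority-class model is only a placeholder so the example runs on its own.

```python
import random
from collections import Counter

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and deal them into k folds of near-equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_val_error(rows, fit, predict, k=10, seed=0):
    """Average test error over k folds: train on k-1 folds, test on the rest."""
    folds = k_fold_indices(len(rows), k, seed)
    errors = []
    for held_out in folds:
        held = set(held_out)
        train = [rows[i] for i in range(len(rows)) if i not in held]
        test = [rows[i] for i in held_out]
        model = fit(train)
        wrong = sum(predict(model, x) != y for x, y in test)
        errors.append(wrong / len(test))
    return sum(errors) / len(errors)

# Placeholder model (hypothetical): always predict the training majority class.
def fit_majority(train):
    return Counter(y for _, y in train).most_common(1)[0][0]

def predict_majority(model, x):
    return model

# Toy data: 20 rows of class 1 and 10 rows of class 2.
rows = [((float(i),), 1 if i % 3 else 2) for i in range(30)]
print(cross_val_error(rows, fit_majority, predict_majority, k=5))
```

To reproduce the graphic you would call `cross_val_error` once per tree size, passing a decision tree learner as `fit`, and log the result next to the error measured on the training data.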
