Exploring Model Management in RapidMiner

By: Fabian Temme, PhD

Editor's Note: Fabian shares some of his experiences with creating Model Management Applications in RapidMiner Server as part of his daily Data Science work.

I recently reached a point in my daily work for the PRESED project (Predictive Sensor Data mining for Product Quality Improvement, www.presed.eu) within the funded R&D Research Team at RapidMiner where I needed to put something into production. This probably sounds familiar to many data scientists once they have found their insight.

It all started in the usual way. I did some complex preprocessing to transform my data into a nice table. Once I had hundreds to thousands of examples with their attributes and assigned labels, I started training models and validating their performance, with the intent to bring them into production.

Here's the problem. Which model do I choose to put into production?

RapidMiner offers a great variety of models, and also the possibility to combine them (for example by grouping, stacking or boosting). But I still had to answer the question: which one?

I decided to test several models and needed an easy way to visualize and compare them. I wanted to do a "model bake-off", and here's how I did it.

For this example we'll use the Sonar data sample provided in RapidMiner and start with a typical standard classification process:

Here I retrieved the input data and trained a Random Forest inside a Cross Validation operator to extract a final model along with an average performance from the cross validation. I then stored the model and the performance vector inside a 'Results' folder in my repository (see below). I used a macro (a process-wide global variable) to define a name (in this case 'Random Forest') for the model and stored the results in a subfolder with this name.

For another model I could simply copy the process, exchange the algorithm, use the macro to automatically name the model, and hit run. I repeated this for each algorithm I wanted to use.
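For readers who think in code, the naming convention can be sketched outside of RapidMiner in plain Python. This is only an analogy under stated assumptions, not how RapidMiner stores repository entries: the function `store_result`, the file name `performance.json`, and the JSON format are all hypothetical, and the `model_name` argument plays the role of the macro.

```python
import json
from pathlib import Path


def store_result(results_dir, model_name, performance):
    """Write one model's performance to <results_dir>/<model_name>/performance.json.

    model_name plays the role of the macro: it names the subfolder.
    The file name and JSON layout are assumptions made for this sketch.
    """
    target = Path(results_dir) / model_name
    target.mkdir(parents=True, exist_ok=True)  # one subfolder per model
    path = target / "performance.json"
    path.write_text(json.dumps(performance, indent=2))
    return path
```

Calling `store_result("Results", "Random Forest", {"accuracy": 0.0})` (the value here is a placeholder, not a measured result) would create `Results/Random Forest/performance.json`. Changing only the name argument gives each algorithm its own subfolder, which is the same effect the macro has in the process.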

This is what my 'Results' folder looked like.

But how easy is it to compare the different methods and do it automatically?

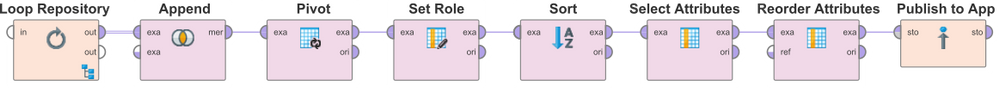

For that I designed a simple Web App on the RapidMiner Server I was working on. First I needed a process that loops over the 'Results' folder, automatically retrieves the performance vectors, and transforms them into one ExampleSet. With the 'Publish to App' operator I made the ExampleSet accessible to the new Web App.
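The loop-and-collect step can be illustrated with a minimal Python sketch. It is an analogy rather than the RapidMiner process itself, and it assumes a hypothetical layout in which each model's subfolder holds a `performance.json` file with its metrics; the function name `collect_performances` is also made up for this example.

```python
import json
from pathlib import Path


def collect_performances(results_dir):
    """Return one row (a dict) per model subfolder that has a performance.json.

    Assumed layout for this sketch: <results_dir>/<model name>/performance.json
    """
    rows = []
    for sub in sorted(Path(results_dir).iterdir()):
        perf_file = sub / "performance.json"
        # Skip stray files and subfolders without a stored performance.
        if sub.is_dir() and perf_file.is_file():
            row = {"model": sub.name}  # the subfolder name identifies the model
            row.update(json.loads(perf_file.read_text()))
            rows.append(row)
    return rows
```

The returned list of rows is the rough equivalent of the single ExampleSet: one line per model, one column per performance criterion, ready to be handed to a table or chart.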

Switching back to the App Designer, I added two visualization components, both subscribing to the ExampleSet published by my process.

The first component uses the 'Chart (HTML5)' format with the Chart-Type 'series' to show a graph of the results; the other uses the 'table' format to show the results directly.

A button that reruns the publishing process completed the Web App.

This is the resulting view.

Done! These processes can easily be adapted to test more algorithms and then visually display them with any performance vector results you want to see.

PS: Check out the attached zip file for process examples!