Great News! RapidMiner has a new Data Core!

By Jesus Puente, PhD.

Let’s start from the beginning: what is a data core?

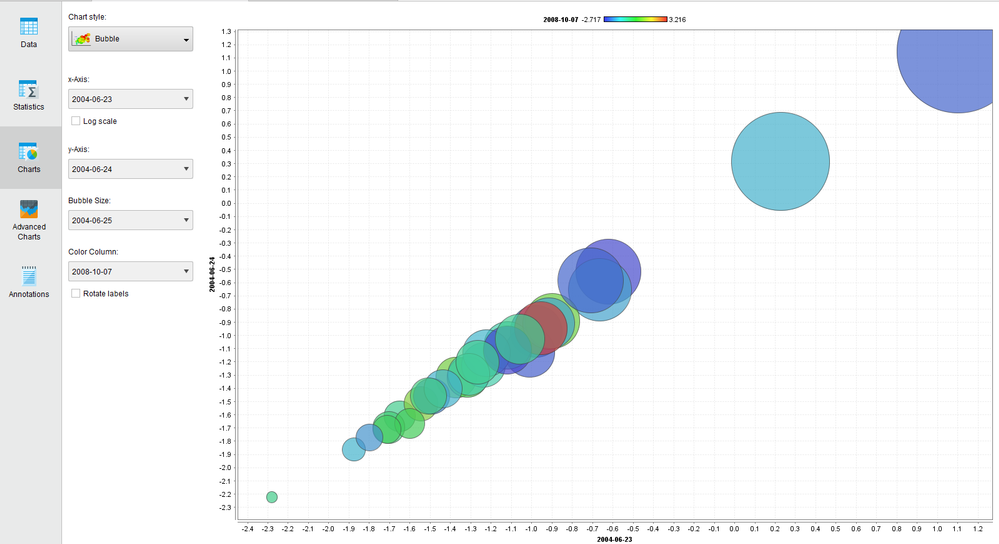

The data core is the component that manages the data inside any RapidMiner process. When you “ingest” data into a process from any data source (database, Excel file, Twitter, etc.), it is always converted into what we call an ExampleSet. No matter which format it had before, inside RapidMiner data always has a tabular form, with Attributes as columns and Examples as rows. Because anything can be added to an ExampleSet, from integers to text or documents, the way this table is handled internally is very important: it has a big impact on how much data you can process and how fast. That is exactly what the Data Core does: it keeps the data in memory, taking types and characteristics into account and making sure memory is used efficiently.

Fig 1. An ExampleSet

Fig 2. Another representation of the same ExampleSet

Yes, but how does it affect me?

Well, the more efficiently the Data Core manages memory, the larger the ExampleSets you can use in your processes. As an additional consequence, some processes can get much faster thanks to improved access to the elements of the ExampleSet.

Can you give an example?

Sure! There are different use cases; one of them is sparse data. By that, we mean data which is mostly zeros, with only a few meaningful numbers here and there. Let’s imagine you run a market basket analysis in a supermarket chain. You have lots of customer receipts on one hand and lots of products and brands on your shelves on the other. If you want to represent that in a table, you end up with a matrix of mostly zeros. The reason is that most people only buy a few products from you, so most buyer-product combinations have a zero in the table. That doesn’t mean that your table is useless. On the contrary, it contains all the information you need.

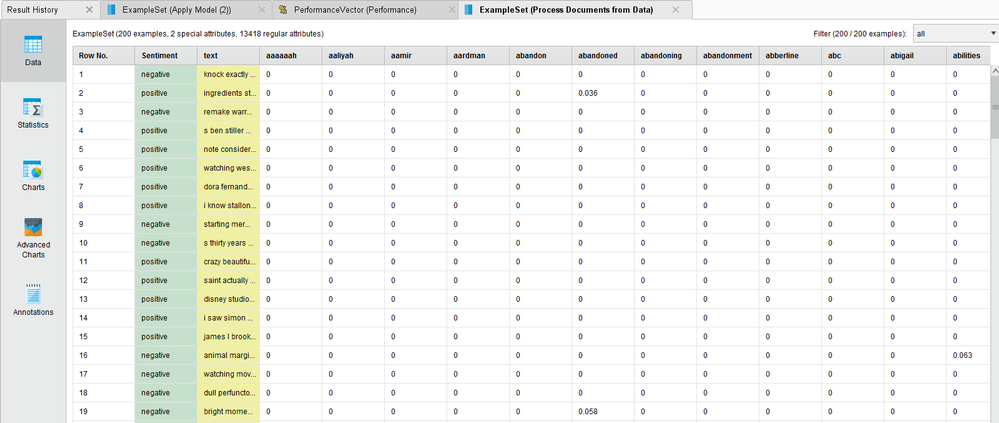

Another example is text processing. Sometimes you end up with a table whose columns (i.e. Attributes) are the words that appear in the texts and the rows (i.e. Examples) are the sentences. Obviously, each sentence only contains a few words so, again, most word-sentence combinations have a zero in their cells.

Fig 3. Sparse data

Well, RapidMiner’s new Data Core automatically detects sparse data and greatly decreases the memory footprint of those tables. It uses a much more compact internal representation, so the ExampleSets become easier to handle and processes run faster.
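To make the idea concrete, here is a minimal Python sketch of a sparse column. It is not RapidMiner's internal format, which this post does not describe; the helper names `to_sparse` and `sparse_get` are made up for illustration. The assumption is simply that only the non-zero cells are stored, and every other cell is understood to be zero.

```python
def to_sparse(column, default=0):
    """Keep only the entries that differ from the default value."""
    return {i: v for i, v in enumerate(column) if v != default}

def sparse_get(sparse, index, default=0):
    """Read a cell back; indices that were not stored hold the default."""
    return sparse.get(index, default)

# One product column across 1,000 receipts: bought on only three of them.
dense = [0] * 1000
dense[3], dense[250], dense[999] = 2, 1, 5

sparse = to_sparse(dense)
print(len(dense), "cells in the dense column")
print(len(sparse), "cells actually stored in the sparse column")
```

The sparse version answers the same lookups as the dense one, but the number of stored cells grows with the number of purchases rather than with the number of receipts.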

Another use case is related to categorical (nominal) data in general. Even in the “dense” (non-sparse) case, data sizes within cells in a table can vary a lot. Integers are small in terms of memory use, while text can be much bigger. The new Data Core also optimizes the representation of this kind of data, allowing for very heterogeneous ExampleSets without unnecessarily wasting memory.
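One common way to store nominal data compactly is to keep each distinct string once and replace the cells with small integer codes. The sketch below shows that general technique in Python; whether the Data Core does exactly this is an assumption, and `encode_nominal` and `decode_nominal` are hypothetical names.

```python
def encode_nominal(values):
    """Replace each distinct string by a small integer code."""
    mapping = {}  # string -> integer code, in order of first appearance
    codes = []
    for value in values:
        if value not in mapping:
            mapping[value] = len(mapping)
        codes.append(mapping[value])
    # Position i of `categories` holds the string that belongs to code i.
    categories = list(mapping)
    return codes, categories

def decode_nominal(codes, categories):
    """Turn the integer codes back into the original strings."""
    return [categories[code] for code in codes]

brands = ["Acme", "Globex", "Acme", "Acme", "Initech", "Globex"]
codes, categories = encode_nominal(brands)
print(codes)       # one small integer per cell
print(categories)  # each distinct string stored only once
```

The longer and more repetitive the strings are, the more this saves, because each repeated value costs one integer instead of a full copy of the text.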

Tell me more!

As so often in life, there is sometimes a tradeoff between speed and memory usage. Operators like Read CSV and Materialize now have an option that can be set to speed-optimized, auto or memory-optimized. These settings let the user choose between a faster but potentially more memory-intensive data management and a more compact but probably slower representation. Auto, of course, decides automatically based on the properties of the data. This is the default and recommended option.

Columnar representation

The representation of data within the new data core is based on columns (Attributes) instead of rows (Examples). This improves performance especially whenever the data transformation is based on columns, which is the most common case in data science. Some examples are:

Data generation

In many processes, it’s necessary to generate new data from the existing columns. The Generate Data operator does that. Also, loops and optimization operators often create temporary attributes. The new data core optimizes these use cases as well, handling newly created data much more efficiently.

Loop attributes, then values

In many data preparation processes, attributes are changed, re-calculated or used in various ways. The columnar representation is ideal for this.
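The difference between the two layouts can be sketched in a few lines of Python. This is only an illustration of row-based versus column-based storage, not the data core API; the attribute names `price`, `quantity` and `total` are invented for the example.

```python
# Row-based layout: one record per Example.
rows = [
    {"price": 2.0, "quantity": 3},
    {"price": 1.5, "quantity": 10},
    {"price": 4.0, "quantity": 1},
]

# Column-based layout: one list per Attribute.
columns = {
    "price": [2.0, 1.5, 4.0],
    "quantity": [3, 10, 1],
}

def add_total_rowwise(rows):
    # Every record has to be visited and modified.
    for row in rows:
        row["total"] = row["price"] * row["quantity"]

def add_total_columnwise(columns):
    # Only the two input columns are read; the result is one new column.
    columns["total"] = [
        p * q for p, q in zip(columns["price"], columns["quantity"])
    ]

add_total_rowwise(rows)
add_total_columnwise(columns)
print(columns["total"])
```

In the columnar version, adding or recalculating an attribute touches only the columns involved and leaves the rest of the table alone, which is why attribute-oriented transformations fit this layout so well.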

Extensions

We have already mentioned text use cases. It’s worth mentioning that Text Processing and other extensions already benefit from the new core. Moreover, we have published the data core API so that extension developers in our community can adapt their existing extensions or create new ones that use the improved mechanism.

Time for some numbers, how good is the improvement?

As should be clear from the previous paragraphs, the degree of improvement depends heavily on the use case. Some processes will benefit a lot and others not so much.

As a benchmarking case, we have chosen a web clickstream use case. We start with a table that contains user web activity. Each row is composed of a user ID, a ‘click’ (a URL) and a timestamp. One of the typical transformations that one would like to do is to move from an event-based table to a user-based table. Just as an example, we’ll transform the data to get a table with all users and the maximum duration of their sessions. This is a process that needs a lot of data shuffling and looping over values, and even for a relatively small data set it can take a lot of time.
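For readers who want to see the shape of this transformation, here is a small Python sketch. It is not the benchmarked RapidMiner process. It assumes timestamps are given in seconds and that a session ends after 30 minutes of inactivity; the post does not say how sessions were defined, so both the `SESSION_GAP` value and the function name are hypothetical.

```python
from collections import defaultdict

# Assumption: 30 minutes without a click ends a session.
SESSION_GAP = 30 * 60

def max_session_duration(events, gap=SESSION_GAP):
    """events: iterable of (user_id, url, timestamp_in_seconds)."""
    # Step 1: regroup the event-based table by user.
    by_user = defaultdict(list)
    for user_id, _url, timestamp in events:
        by_user[user_id].append(timestamp)

    # Step 2: split each user's clicks into sessions, keep the longest one.
    result = {}
    for user_id, stamps in by_user.items():
        stamps.sort()
        longest = 0
        start = prev = stamps[0]
        for timestamp in stamps[1:]:
            if timestamp - prev > gap:
                # The gap is too long: close the current session.
                longest = max(longest, prev - start)
                start = timestamp
            prev = timestamp
        result[user_id] = max(longest, prev - start)
    return result

events = [
    ("u1", "/home", 0),
    ("u1", "/cart", 600),
    ("u1", "/home", 10_000),
    ("u1", "/pay", 10_100),
    ("u2", "/home", 50),
]
durations = max_session_duration(events)
print(durations)
```

Even in this toy version you can see the regrouping by user and the loop over each user's values, which are the steps that make the real process expensive.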

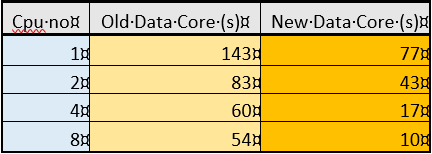

Let’s start with a small amount of data: 10,000 examples. I ran the process on my 8-core laptop with 32 GB of RAM. These are the results (runtimes in seconds) by number of threads used for parallelization.

Fig 4 - Benchmark results

With a single core (what’s available in the Free license), the new Data Core already provides 2x performance. As more cores are used, the times get smaller and smaller. See the numbers below: with the old core using 1 thread, the job took more than 2 minutes to complete; now, with parallelization and the new data core, it only takes 10 seconds. That is a speedup of more than 12x!

Fig. 5 - Benchmark data

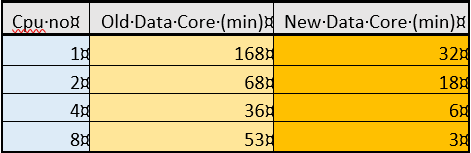

In this case, the new data core helped improve performance. However, the data core is all about memory, and we’ll see that in the next example. Let’s run the same process, but with a data set 5 times larger (50,000 rows). Take a look at the numbers:

Fig. 6 Benchmark data (larger data set)

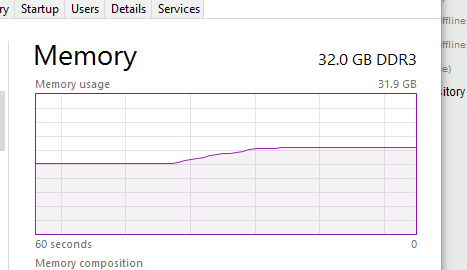

This time, runtimes are in minutes. As you can see, the pattern for the new data core is similar to that in the previous example. It’s more data, so it takes more time, but times are reasonable. However, with the old data core, times simply blow up. And here’s the reason:

Figure 7

Very soon, my 32 GB of main memory is fully used and everything gets extremely slow. The same process with the new data core looks like this:

Fig 8

Memory usage never goes beyond 65%. Therefore, for a given amount of memory, the new data core allows you to work with data set sizes that were unmanageable before.

Conclusion

RapidMiner’s new data core is a big deal. It improves data and memory management, and it allows you to work with much bigger data sets while keeping your memory demand at bay.

It’s already available as a beta. Try it NOW!