How do I apply a different neural network model to each example set inside Loop Attributes?

Maven


Hello everyone, I'm working with a neural network for forecasting.

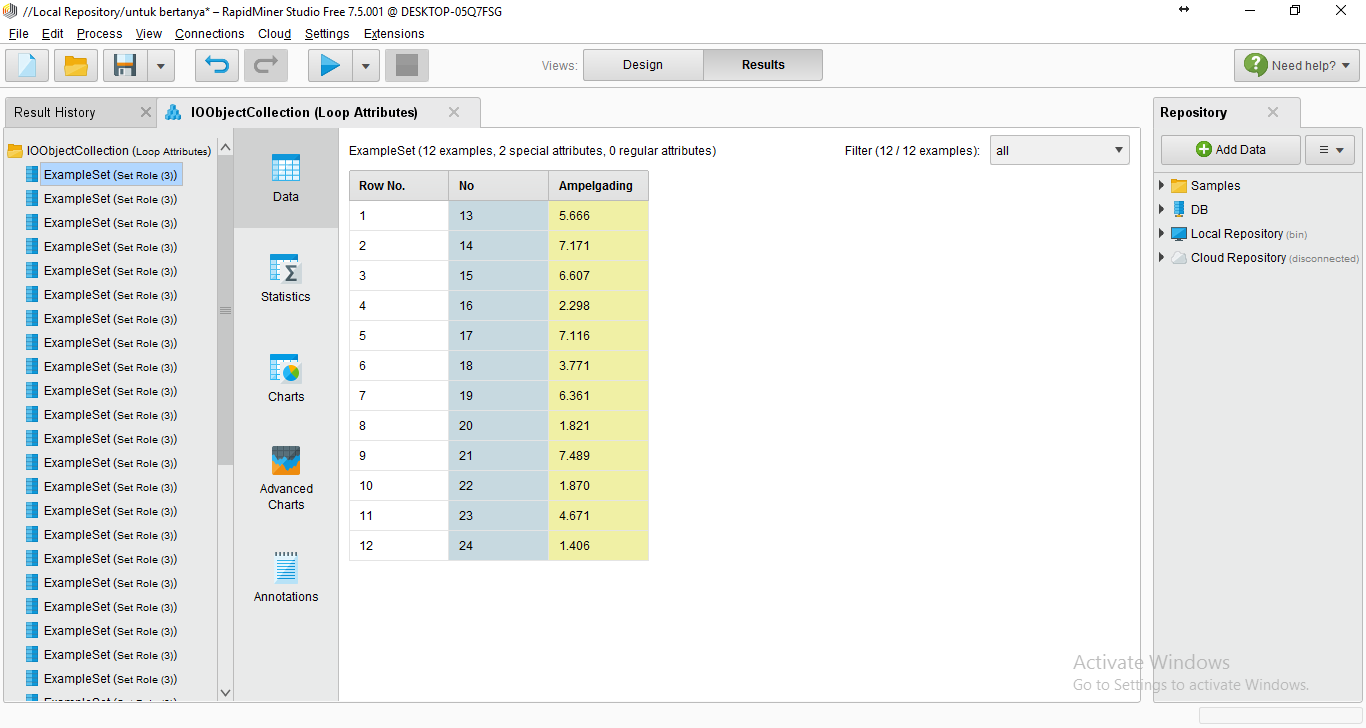

In my project, I have an IO Object Collection that contains the example sets produced by Loop Attributes.

Example Sets on IO Object Collection


Inside Loop Attributes, I put a validation operator that forecasts with the Neural Net operator, so each example set gets its own prediction.

Sliding window validation

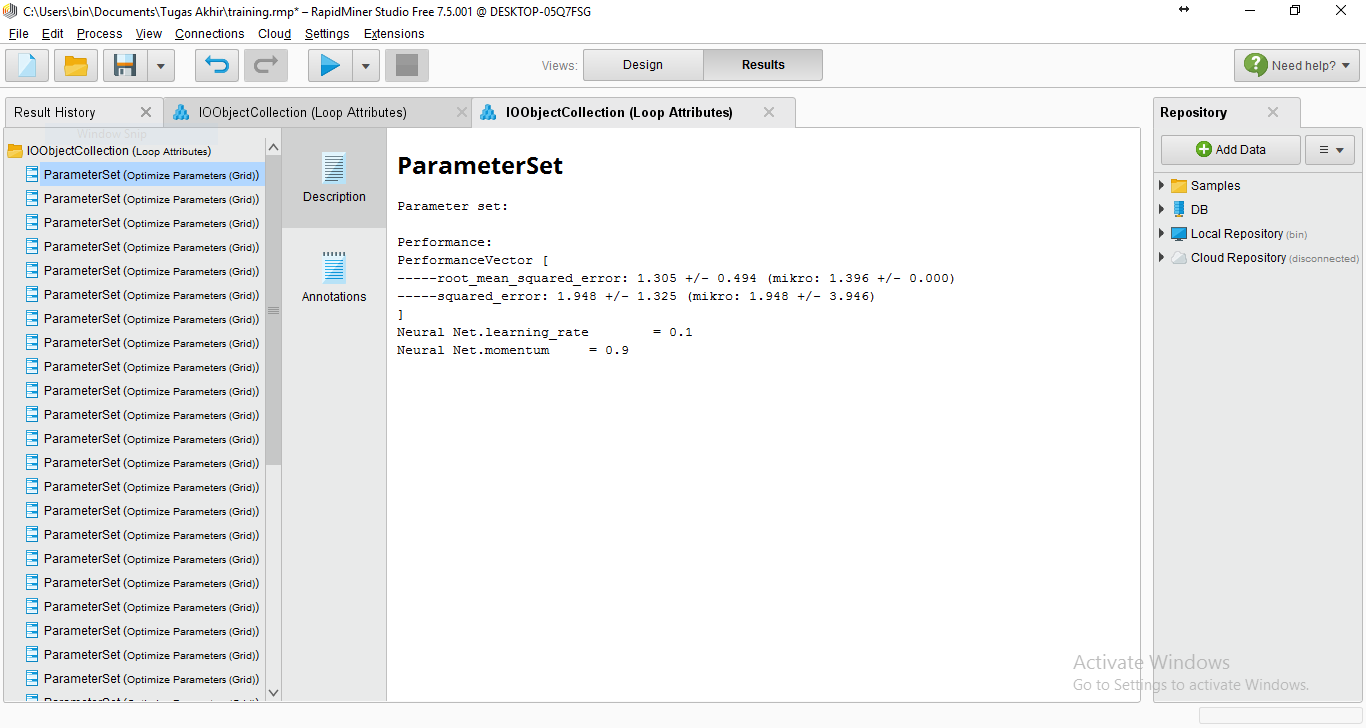

To get the best model for each example set, I used the Optimize Parameters (Grid) operator, and each example set got its own best model (different training cycles, learning rate and momentum).

Result of Optimize Parameters (Grid) (on each example set)

Once each example set has its own best model, how do I apply those optimized parameters to each example set in my process? If I enter them in the Neural Net operator, it only applies one model to all of them.

Inside the validation operator

Best Answers

binsetyawan

Member Posts: 46  Maven

Excuse me, sir: when I put a Write Model operator after the Optimize Parameters (Grid) operator, is the model that comes out of the mod port the best model? If so, I set the model name parameter to test_%{loop_attribute}, which gives me one .mod file per loop iteration, so I can load each one with Read Model and connect it to Apply Model for testing.

Thomas_Ott

RapidMiner Certified Analyst, RapidMiner Certified Expert, Member Posts: 1,761  Unicorn

That is correct, the model that comes out of the Optimize Parameters operator is the best model.
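The workflow confirmed here (write one model per loop attribute, then read back the matching one for testing) can be sketched as a rough Python analogy. This is not RapidMiner itself: `pickle` stands in for Write Model and Read Model, the "models" are plain dicts of hypothetical parameter values, the attribute names `sales_a` and `sales_b` are made up, and the files are not in RapidMiner's .mod format.

```python
import os
import pickle
import tempfile


def write_models(models, folder):
    """Write one file per loop attribute, like Write Model with test_%{loop_attribute}."""
    for attribute, model in models.items():
        path = os.path.join(folder, f"test_{attribute}.mod")
        with open(path, "wb") as f:
            pickle.dump(model, f)


def read_model(attribute, folder):
    """Read back the model for one attribute, like Read Model before Apply Model."""
    with open(os.path.join(folder, f"test_{attribute}.mod"), "rb") as f:
        return pickle.load(f)


# Hypothetical "best models": only the tuned settings, for illustration.
best_models = {
    "sales_a": {"training_cycles": 500, "learning_rate": 0.3, "momentum": 0.2},
    "sales_b": {"training_cycles": 200, "learning_rate": 0.1, "momentum": 0.5},
}

with tempfile.TemporaryDirectory() as folder:
    write_models(best_models, folder)
    files = sorted(os.listdir(folder))
    restored = read_model("sales_b", folder)

print(files)  # ['test_sales_a.mod', 'test_sales_b.mod']
print(restored)
```

Because the file name changes with the attribute, nothing is overwritten. With a fixed name such as test.mod, each write would replace the previous file and only the last iteration's model would survive.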


Answers

Couldn't you store each model right after the validation and name it with a macro, like model_%{loop_attribute}? That way you store the model from each iteration and can use it to score your individual data sets later.

Yes, I've tried using a macro to save the model. I put it after the validation operator, but the model keeps getting overwritten and I don't know what value to fill in. So is it not possible to apply the models if they have different numbers of nodes in the hidden layer?

To prevent it from being overwritten, you need to use a valid macro. I chose %{loop_attribute} because it's already defined by the Loop Attributes operator. Another option is %{t}, the timestamp at which the process is executed.

I'm sorry, I'm still confused by your explanation.

You can save the model after each iteration, provided that you append a macro such as the timestamp to the model name. Save it as "mymodel_%{t}" so that it isn't overwritten.

Instead of using a macro, is it possible to make a list of ANN parameter values for each example set, apply it in my process, and have each example set pick up its own model? I've tried the Write Parameters operator, but the values get overwritten by the latest loop_attribute. And if that problem were resolved, how would I apply the output of Write Parameters in my process?

Have you thought of using the Remember and Recall operators? You might be able to load the written parameters in a loop, Remember them for one iteration, and then load the next set for the second iteration.

Sorry, I'm more confused

I'm going to edit my topic and add pictures so it's easier to understand and to see where to add the operators.

And I haven't found any solution yet. I'm so confused.

Each parameter optimization exports an optimized model with its LR and MOM values. I see that you have a lot of them in your output because of the loop; using them is going to be tricky, but I don't think it's that hard.

I would consider scoring your data right after the parameter optimization. That way you loop your training and optimization and simultaneously score your data inside the loop, then save each scored set with a macro or use an annotation to differentiate them.
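The structure suggested here (optimize and score in the same pass, keeping each result under its own name) can be sketched in Python. To stay self-contained, the sketch swaps the neural network for simple exponential smoothing with one tunable parameter, alpha; this is a stand-in, not the model from the thread. The grid, the error measure and the series names are illustrative assumptions.

```python
from typing import Dict, List, Sequence, Tuple


def ses_forecast(series: Sequence[float], alpha: float) -> float:
    """Stand-in model: simple exponential smoothing, NOT a neural network."""
    level = series[0]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


def one_step_error(series: Sequence[float], alpha: float) -> float:
    """Mean absolute one-step-ahead error, playing the role of the validation score."""
    errors = [abs(series[t] - ses_forecast(series[:t], alpha))
              for t in range(1, len(series))]
    return sum(errors) / len(errors)


def optimize_and_score(datasets: Dict[str, List[float]],
                       grid: Sequence[float]) -> Dict[str, Tuple[float, float]]:
    results = {}
    for name, series in datasets.items():  # like Loop Attributes: one pass per series
        # like Optimize Parameters (Grid): keep the setting with the lowest error
        best = min(grid, key=lambda a: one_step_error(series, a))
        # score right away and store the result under this series' own name
        results[name] = (best, ses_forecast(series, best))
    return results


results = optimize_and_score({"trend": [1, 2, 3, 4, 5], "flat": [5, 5, 5, 5]},
                             grid=[0.2, 0.5, 1.0])
print(results["trend"])  # (1.0, 5.0)
```

Each entry holds the chosen setting and its forecast, so no iteration overwrites another; in RapidMiner the same role is played by storing each scored set under a macro-based name or an annotation.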

Yes, I have a lot of them because each one is the best parameter set for one example set, which I got from the loop and a join. But I need to know the trick to apply the models I got, because that is the core of the project.

Do I really need to use a macro operator? Once I have the best model from Optimize Parameters, I plan to remove the Optimize Parameters operator, replace it with the validation operator that was previously inside it, and connect the mod port to Apply Model on the test data.

up

So... it's not possible?

It's probably possible, but very complex. I would post your process and data here and see whether someone wants to take up the challenge. Actually, we're starting data challenges, if you want to offer a prize like this one: http://community.rapidminer.com/t5/General-Chit-Chat/RapidMiner-Data-Modeling-CHALLENGE-200-in-cash-and-prizes/m-p/39159

I just realized you did post your process and data.

Something like this doesn't work?

Ah, I'm sorry, it isn't.

What I'm looking for is how to apply the different parameter values I got (TC, LR, MOM) for each example set to the Neural Net operator.

I would love to do something like that if I had more money :smileysad:

I think the answer is to use Set Macros for your MOM, LR, etc.
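One way to read this suggestion, sketched in Python under assumptions: keep a table of the best training cycles, learning rate and momentum for each example set, look up the row for the current loop attribute at the start of each iteration, and expose the values as macros (here named tc, lr and mom) that the Neural Net operator's parameters would reference as %{tc}, %{lr} and %{mom}. The macro names, attribute names and values are hypothetical placeholders for the Optimize Parameters results.

```python
from typing import Dict, NamedTuple


class NetParams(NamedTuple):
    training_cycles: int
    learning_rate: float
    momentum: float


# Hypothetical best settings per example set, copied from each
# Optimize Parameters (Grid) result.
BEST_PARAMS: Dict[str, NetParams] = {
    "sales_a": NetParams(training_cycles=500, learning_rate=0.3, momentum=0.2),
    "sales_b": NetParams(training_cycles=200, learning_rate=0.1, momentum=0.5),
}


def macros_for(loop_attribute: str) -> Dict[str, str]:
    """Return the macro values for one iteration, like Set Macros.

    Macro values are text, so the numbers are converted to strings.
    An unknown attribute raises KeyError instead of silently reusing
    another example set's parameters.
    """
    p = BEST_PARAMS[loop_attribute]
    return {"tc": str(p.training_cycles),
            "lr": str(p.learning_rate),
            "mom": str(p.momentum)}


for attribute in ["sales_a", "sales_b"]:
    print(attribute, macros_for(attribute))
```

The Neural Net operator itself stays the same in every iteration; only the values behind its macros change, which is the per-example-set behaviour asked for earlier in the thread.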

Okay, I understand the overall design of your recommendation... but when you put two macros there, I think it's similar to having the validation inside Optimize Parameters and optimizing the Neural Net parameters. Or is something wrong with my understanding?

So I have to run the process to get the best model (which takes a long time), store the model, and then for testing use the Retrieve operator to apply it? Hmm, somehow the best model I've found is useless and can't be applied :catsad: :smileymad:

And for testing, my data is only 12 rows (2016, monthly). For the Windowing operator I use a window size of 12, a step size of 1 and a horizon of 1. I want to know how good the performance is, so I put a Performance operator after Apply Model, but it raises an error saying there is no label. So I edited the Windowing operator to create a label, but that raises an error too: it says at least 12 + 1 = 13 examples are needed, but there are only 12.

How can I measure the performance for testing? @Thomas_Ott

To test the performance you will need a label. Usually we do this via a Validation type operator. With respect to your data set, you don't have enough testing data.
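The arithmetic behind "not enough testing data" can be checked with a small Python function. It assumes a windowing convention consistent with the error message in this thread: a window starting at row i uses rows i to i + window - 1 as inputs, and its label is the row horizon steps after the window's last row, so a labeled example needs window + horizon rows. RapidMiner's Windowing operator may differ in edge cases, so treat this as an approximation.

```python
def labeled_windows(n_rows: int, window: int, horizon: int, step: int = 1) -> int:
    """Count windows that have both a full input window and a label.

    Assumed convention: the window starting at row i uses rows
    i .. i + window - 1 as inputs and row i + window + horizon - 1 as label.
    """
    needed = window + horizon  # rows required for the first labeled window
    if n_rows < needed:
        return 0
    return (n_rows - needed) // step + 1


print(labeled_windows(12, window=12, horizon=1))   # 0: 12 rows, but 13 are needed
print(labeled_windows(24, window=12, horizon=12))  # 1
print(labeled_windows(24, window=12, horizon=1))   # 12
```

Under this convention, the 24-row setup proposed in the next posts (window 12, step 1, horizon 12) yields a single labeled example, so the Performance operator would be judging the model on one prediction.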

I see, so that is the problem...

Maybe I should add more data for testing: 2015 + 2016 = 24 rows, with a window size of 12, a step size of 1 and a horizon of 12, to forecast 2017.