A New User Journey - Part 2

By: Nithin Mahesh

In my last post, I introduced my work at RapidMiner this summer and the churn data science project I was given to work on. I touched briefly on the data set, which was provided for the KDD Cup 2009 and comes from the large marketing database of the French telecom company Orange. In this post, I will talk about how I began to learn RapidMiner, starting with my data prep.

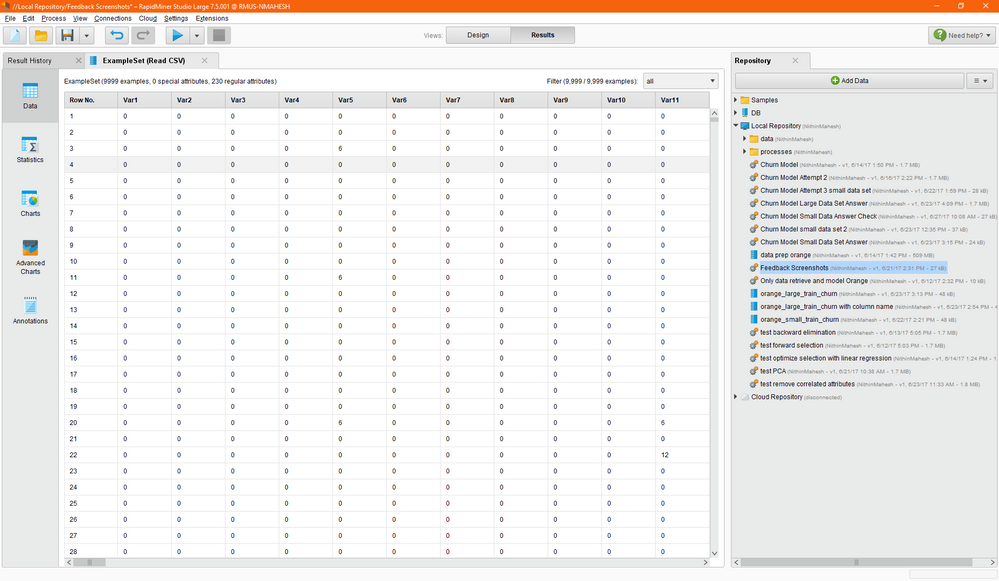

The first challenge was figuring out which models and prep were needed, since the majority of the data contained numeric values. Categorizing them was hard! Here is the data I was given once I uploaded it into RapidMiner Studio:

Before attempting any data prep, I opened the RapidMiner Studio tutorials, which are found under the create process button and then Learn, as shown below:

This helped me understand how to navigate the software and the basic flow needed to run analytics on a data set. The RapidMiner website also provided some good introductory getting-started videos. After playing around with several tutorials, I had a basic knowledge of how things worked and began to plan how to prep this data set. The same create new process section also has many templates that can be run to see examples of the analytics you may use on your own data. In my case I was looking at customer churn, and I found a template that analyzes a data set to predict whether each customer will churn (true) or not (false).

After going through some tutorials, I was ready to import the data. I began by clicking the add data button, but ran into some errors. I found that the read csv operator was much more powerful and ended up using it instead, despite the data having an odd file type (.chunk). Initially I had some issues with how the data was being spaced, and realized this was because I had not configured the right parameter in the import wizard. After getting the data in and connecting it to the results port, I started to plan how to organize the seemingly random numeric values. The first thing I noticed was that the set contained many missing values, indicated by “?”, so I needed the replace missing values operator. I first set its parameters to replace these values with zero, and later changed this to replace them with the average instead.
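For readers who think in code, here is a rough Python sketch of the same idea: read a delimited file, treat “?” as missing, and fill each gap with the column average. It is only an illustration and not what RapidMiner does internally. The tab delimiter, the column names, and the tiny inline data are all assumptions made up for the example.

```python
import csv
import io

# Hypothetical stand-in for the real file: tab-separated, "?" marks a missing value.
RAW = "Var1\tVar2\tVar3\n1.0\t?\t7\n?\t4.0\t9\n3.0\t8.0\t?\n"

def read_numeric_table(text, delimiter="\t", missing="?"):
    """Parse delimited text into column names and rows of floats (None = missing)."""
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    header = next(reader)
    rows = [[None if cell == missing else float(cell) for cell in line]
            for line in reader]
    return header, rows

def impute_with_mean(rows):
    """Replace each None with the mean of the observed values in its column."""
    n_cols = len(rows[0])
    means = []
    for j in range(n_cols):
        observed = [row[j] for row in rows if row[j] is not None]
        # Fall back to 0.0 if a column has no observed values at all.
        means.append(sum(observed) / len(observed) if observed else 0.0)
    return [[means[j] if value is None else value for j, value in enumerate(row)]
            for row in rows]

header, rows = read_numeric_table(RAW)
filled = impute_with_mean(rows)
```

Filling with zero would only require swapping the mean for a constant, which is why changing the operator's parameter later was a small step.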

I then downloaded and imported the label data using the add data button, then joined the two data sets as shown below:

This gave me an error, since I first needed to create a new column that the join could use to add in the label data. I tried the append operator, which I learned after some playing around is meant for merging example sets rather than adding a column. I eventually found out that the generate ID operator is the right one to use to create the new column.
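The idea behind generating an ID and then joining can be sketched in plain Python. This is a minimal illustration under one big assumption: that the label file lists one label per row in the same order as the feature file, so the row number works as a shared key. The function names and the values are made up for the example.

```python
def generate_id(rows):
    """Attach a 1-based row number to each row, similar in spirit to an ID column."""
    return {i: row for i, row in enumerate(rows, start=1)}

def join_on_id(features_by_id, labels_by_id):
    """Inner join: keep only the IDs that appear in both tables."""
    shared_ids = sorted(features_by_id.keys() & labels_by_id.keys())
    return [
        {"id": i, "features": features_by_id[i], "label": labels_by_id[i]}
        for i in shared_ids
    ]

# Made-up values: three customers and their churn labels.
features = [[1.0, 6.0], [2.0, 4.0], [3.0, 8.0]]
labels = [-1, 1, -1]

joined = join_on_id(generate_id(features), generate_id(labels))
```

Without the shared key there is nothing to match rows on, which is roughly why the join failed before the ID column existed.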

When preparing data, it is useful to split it into train and test sets so that we can train and tune the model on the training set. Once this is done, we can use the test set to check how well the trained model generalizes to data it has not seen before. One thing I was unaware of is that RapidMiner Studio contains many operators that combine multiple steps into a single one. The subprocess panels inside these operators are their most powerful feature, allowing me to perform a lot of analytics in one go, as shown below:
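As a point of comparison, a simple holdout split might look like this in Python. It is a sketch only: the 30% test fraction and the fixed seed are arbitrary choices for the example, not settings taken from my process.

```python
import random

def train_test_split(rows, test_fraction=0.3, seed=42):
    """Shuffle a copy of the rows and cut it into (train, test) lists."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

rows = list(range(10))  # stand-in for ten examples
train, test = train_test_split(rows)
```

Every example ends up in exactly one of the two lists, so the model never sees the test rows while it is being trained.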

Splitting data into test and train sets, running models, and measuring performance can all be done using a single operator. This lets one run in a single step what would take a couple of separate steps in R. In my case, cross validation seemed like the best option, since I needed to split my data into train and test sets and then check my model’s accuracy, precision, and overall performance.
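To make the steps bundled into cross validation more concrete, here is a small Python sketch of k-fold cross validation. It assumes a deliberately trivial "model" that always predicts the most common training label, and the labels are made up. It only shows the split, train, predict, and score loop, not how RapidMiner implements the operator.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs; each index lands in exactly one test fold."""
    for fold in range(k):
        test_idx = [i for i in range(n) if i % k == fold]
        train_idx = [i for i in range(n) if i % k != fold]
        yield train_idx, test_idx

def majority_label(labels):
    """Most frequent label; ties go to the smallest label."""
    return max(sorted(set(labels)), key=labels.count)

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def precision(y_true, y_pred, positive=1):
    """Share of predicted positives that are truly positive (0.0 if none predicted)."""
    predicted_pos = [t for t, p in zip(y_true, y_pred) if p == positive]
    if not predicted_pos:
        return 0.0
    return sum(t == positive for t in predicted_pos) / len(predicted_pos)

def cross_validate(labels, k=5):
    """Return one (accuracy, precision) pair per fold for the baseline model."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(labels), k):
        guess = majority_label([labels[i] for i in train_idx])  # "training"
        y_true = [labels[i] for i in test_idx]
        y_pred = [guess] * len(y_true)                          # "prediction"
        scores.append((accuracy(y_true, y_pred), precision(y_true, y_pred)))
    return scores

# Made-up labels: 1 = churned, -1 = stayed.
labels = [-1, -1, -1, 1, -1, -1, 1, -1, -1, -1]
scores = cross_validate(labels, k=5)
mean_accuracy = sum(a for a, _ in scores) / len(scores)
```

Even this do-nothing baseline gets a decent accuracy because most customers do not churn, while its precision on churners is zero. That is one reason to look at more than accuracy.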

At this point in my data prep I ran into a couple of the problems that come with using new software for the first time. Since I was running cross validation, which is a big process, on such a large data set, I did not have enough memory to finish it. When this occurs, it’s best to either cut down the number of operators used or reduce the number of attributes. Using the remove useless attributes operator, I could cut some features that were not contributing anything. Some other useful operators were the free memory and the filter examples operators.
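Reducing attributes can be sketched in Python too. The rules below (drop a column if it is constant or almost entirely missing) and the 0.9 threshold are my own simplifying assumptions for illustration. They are not the exact criteria the remove useless attributes operator applies.

```python
def useless_columns(header, rows, max_missing_fraction=0.9):
    """Return names of columns that are constant or almost entirely missing (None)."""
    drop = []
    for j, name in enumerate(header):
        values = [row[j] for row in rows]
        observed = [v for v in values if v is not None]
        missing_fraction = 1 - len(observed) / len(values)
        if missing_fraction > max_missing_fraction or len(set(observed)) <= 1:
            drop.append(name)
    return drop

def drop_columns(header, rows, names):
    """Return a new header and rows without the named columns."""
    keep = [j for j, name in enumerate(header) if name not in names]
    return [header[j] for j in keep], [[row[j] for j in keep] for row in rows]

# Made-up data: Var2 never changes and Var3 is entirely missing.
header = ["Var1", "Var2", "Var3", "Var4"]
rows = [
    [1.0, 5.0, None, 0.0],
    [2.0, 5.0, None, 1.0],
    [3.0, 5.0, None, 0.0],
]

dropped = useless_columns(header, rows)
new_header, new_rows = drop_columns(header, rows, dropped)
```

Fewer columns means less data to hold in memory during each fold, which is the whole point when cross validation is running out of room.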

In my next post, I will talk about my results (or lack thereof), what issues I faced, how I went about solving them, and what I found to be the most useful features in RapidMiner Studio.