A New User Journey - Part 3

By: Nithin Mahesh

In my last post, I talked about how I began prepping my data, some of the operators I used, and some of the issues I ran into. In this post, I will talk briefly about my results and some of the most useful features in RapidMiner Studio.

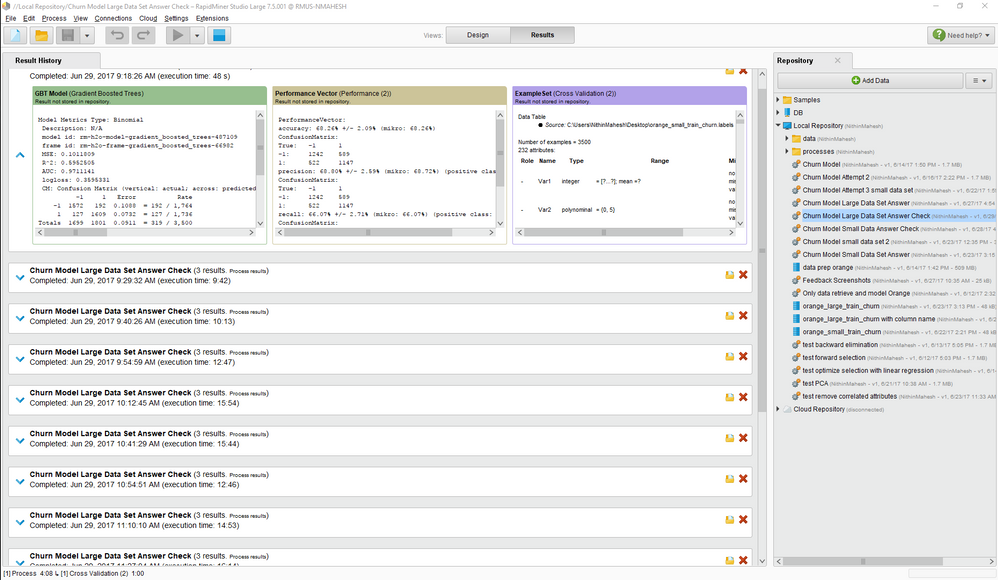

As I mentioned last week, I ended up not getting the results I wanted. Even after running different validations on my data, including cross validation, I kept getting an AUC of about 0.519. That is barely better than random guessing (an AUC of 0.5) and far below the 0.7611 that IBM Research achieved.
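To put that number in context, here is a minimal Python sketch of what AUC measures: the probability that a randomly chosen positive example is scored higher than a randomly chosen negative one. This is not how RapidMiner computes it internally; the `auc` function and the toy labels and scores are assumptions made up for illustration.

```python
from itertools import product


def auc(labels, scores):
    """Fraction of (positive, negative) pairs where the positive is scored higher.

    Ties count as half a win. Labels are 1 (positive) or 0 (negative).
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p, n in product(pos, neg)
    )
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: two positives followed by two negatives.
labels = [1, 1, 0, 0]

# Every positive outranks every negative.
perfect = auc(labels, [0.9, 0.8, 0.2, 0.1])

# A constant score carries no information, so every pair is a tie.
uninformative = auc(labels, [0.5, 0.5, 0.5, 0.5])

# One positive is ranked below one negative.
partial = auc(labels, [0.9, 0.2, 0.8, 0.1])
```

A model that scores every example the same lands at 0.5, so an AUC of 0.519 means the model is ranking examples only slightly better than chance.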

There are a couple of small things I wish I had done before jumping into the data set. Signing up for the workshop earlier would have been helpful; it gave me a nice review of how to import, prep, model, and interpret my data. The instructor was good at answering any questions I had, and it helped a lot that the workshop was interactive. I also picked up a lot of simple productivity features, such as how to disable unused operators and how to organize my operators so they weren’t all over the screen. Another feature I learned about later, and one worth looking at, is background process execution. It is available to commercial users and lets you keep working on other processes while one runs in the background.

Viewing your data table can also be a challenge at times. If you’ve ever run any processes in RapidMiner, you have probably run into the issue of not being able to view your data table after closing the results, closing the application, or running another process (shown below).

It took me a bit to realize that breakpoints let you view the table at any operator in the process, which is really useful for debugging and for seeing how each step changes your data set. You can set one by right-clicking on an operator, as shown below:

After a lot of data prep, I ran some models and validations as mentioned in the last post. The problem was that my data prep was very process intensive, and despite having access to all the cores of my computer, I ran into hours of loading time before even getting to my models. I learned later that there is a way to cut down the time using the Multiply and Store operators: I took a copy of my data prep output (Multiply) and then saved it (Store). I then created a new process in which I used a Retrieve operator to load the stored data. In my new process, I could run my cross validation and models without having to rerun all my data prep, which saved me hours of waiting time. One thing to note is that any time I changed a parameter in the data prep, I had to run that process again so that the model process picked up the change.
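The same store-then-retrieve idea can be sketched outside RapidMiner. The Python below is only an analogy, not RapidMiner's API: `expensive_prep`, `store`, `retrieve`, and the file name are hypothetical names chosen for illustration, and the "prep" is a trivial stand-in for hours of real work.

```python
import pickle
import tempfile
from pathlib import Path


def expensive_prep(rows):
    """Stand-in for a slow data prep process (hypothetical)."""
    return [{"id": r, "value": r * 2} for r in rows]


def store(data, path):
    """Save the prepped data to disk, like a Store operator."""
    with open(path, "wb") as f:
        pickle.dump(data, f)


def retrieve(path):
    """Load previously stored data, like a Retrieve operator."""
    with open(path, "rb") as f:
        return pickle.load(f)


# "Prep process": run the slow step once and store the result.
cache_path = Path(tempfile.mkdtemp()) / "prepped_data.pkl"
prepped = expensive_prep(range(5))
store(prepped, cache_path)

# "Model process": start from the stored copy instead of redoing the prep.
loaded = retrieve(cache_path)
```

As with the RapidMiner setup, the stored copy goes stale whenever the prep changes, so the prep step has to be rerun and stored again before the modeling step sees the update.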

This brings me to another important feature to keep in mind: the logs. With a large data set, some of the validations I ran would take too long to finish. I would wait for hours just to get an error telling me the computer was out of memory. I eventually found the logs, under View, then Show Panel, which showed warnings and errors while the process was still running, so I no longer had to waste time waiting for a doomed process to end.

The Help tab in RapidMiner Studio is another useful resource. It gives a nice overview of the parameters and their functions for any of the hundreds of operators available, and the documentation includes links to hands-on tutorials right in RapidMiner under Tutorial Process. RapidMiner’s Wisdom of Crowds feature was also useful within Studio; it is great for finding the operators most relevant to a task, especially when I was unsure of what to use. The community page was the next best resource. Any specific questions I had were either covered in past posts, or I could make my own post. The response time to the questions I posted was quick as well!

In my next post, I will talk about my end results and what I did to my data prep to finally get the AUC I was looking for.