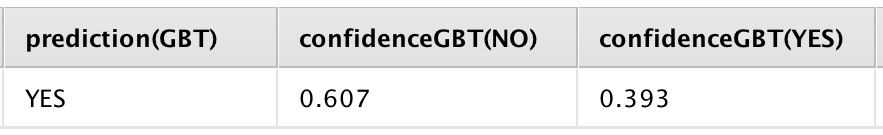

GBT confidence value vs. prediction

Hello community,

I am currently experimenting a bit with gradient boosted trees for some classification tasks. It became one of my favorite models a while ago, due to its speed and good prediction results, and I thought I really understood what was going on. However, in my recent experiments I regularly get an output on a class prediction that looks like the image below:

There are two classes (YES and NO) and the prediction accuracy is overall quite good. Nevertheless, what puzzles me is that, looking at the confidence levels for the two classes, I would expect a different prediction for some examples. In the example above the confidence for a YES prediction is 39.3%, whereas for NO it is 60.7%. Why does the model then predict (correctly) an outcome of YES?

Any insights on or references to how the confidence levels are calculated in GBTs would be highly appreciated.

Thank you.

Unicorn


Answers

Hey FBT,

Which version of RapidMiner are you running?

H2O models (GBT, GLM & LogReg) have the special feature of choosing their own classification threshold instead of using a fixed 0.5 cut-off. This is similar to the Optimize Threshold operator, except that the model picks the threshold that maximizes the F1-measure. A prediction can therefore be YES even when the confidence for YES is below 50%.

We encountered this behavior, which is slightly confusing for RM users, for LogReg one or two versions ago. I thought we had fixed this for all H2O models.
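To illustrate how an F1-optimized threshold can produce a YES prediction at a confidence below 0.5, here is a minimal plain-Python sketch. It is not H2O's or RapidMiner's actual implementation: the function names, the toy scores and labels, and the rule "predict YES if confidence(YES) >= threshold" are all assumptions made for illustration.

```python
# Sketch only: picks the cut-off on confidence(YES) that maximizes F1.
# All names and data below are hypothetical, not taken from H2O or RapidMiner.

def f1_score(labels, scores, threshold):
    """F1 of the YES class when predicting YES iff score >= threshold."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if y and s >= threshold)
    fp = sum(1 for y, s in pairs if not y and s >= threshold)
    fn = sum(1 for y, s in pairs if y and s < threshold)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def max_f1_threshold(labels, scores):
    """Try every observed score as a candidate threshold, keep the best one."""
    candidates = sorted(set(scores))
    return max(candidates, key=lambda t: f1_score(labels, scores, t))

def predict(confidence_yes, threshold):
    return "YES" if confidence_yes >= threshold else "NO"

# Made-up training data: confidence(YES) and whether the true label is YES.
scores = [0.90, 0.70, 0.45, 0.42, 0.393, 0.35, 0.30, 0.20, 0.10]
labels = [True, True, True, False, True, False, False, False, False]

threshold = max_f1_threshold(labels, scores)
print("chosen threshold:", threshold)
print("prediction at 39.3% confidence:", predict(0.393, threshold))
```

On this toy data the best F1 is reached with a cut-off below 0.5, so an example with 39.3% confidence for YES is still labeled YES, which matches the kind of output described in the question.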

Cheers,

Martin

Dortmund, Germany

Hi Martin,

thanks for the response. I am still on 7.5.003, but will upgrade shortly and see whether it still persists.

OK, I have now updated to 7.6.001 and can confirm that I still get GBT predictions that are counterintuitive given the confidence values. This is not critical in my current case, but it may throw off somebody who uses the confidence levels as thresholds. Interestingly, the values differ significantly from the ones I saw in RM 7.5.003 with identical input and model.

I'll add my 5 cents: I have also encountered this behaviour a couple of times with GBT in RM 7.6. A confidence lower than 0.5 still led to a 'TRUE' prediction.

Vladimir

http://whatthefraud.wtf

I am also seeing this. Is there a way to resolve this issue? In my case, I feel the confidence values point to the right prediction, as opposed to the predicted label. I would like to understand this in more detail. If anyone knows, please let us know.

Thanks for starting this thread.

Shraddha

Hi,

you can always fix this manually with Create Threshold followed by Apply Threshold.
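For readers who want to see the idea outside of RapidMiner, the following plain-Python sketch re-derives the labels from the confidences using a fixed cut-off. It only mirrors the concept behind the two operators, not their implementation: the function name, the row layout, the 0.5 default, and the tie handling (strictly greater than) are assumptions.

```python
# Sketch only: relabel examples from confidence(YES) with a fixed threshold.
# Hypothetical helper and data; not the RapidMiner operators themselves.

def apply_threshold(confidence_yes, threshold=0.5, positive="YES", negative="NO"):
    """Return the positive label only if its confidence exceeds the threshold."""
    return positive if confidence_yes > threshold else negative

# Made-up scored examples, including the 39.3% case from the question.
rows = [
    {"confidence_yes": 0.393, "prediction": "YES"},
    {"confidence_yes": 0.810, "prediction": "YES"},
    {"confidence_yes": 0.120, "prediction": "NO"},
]

for row in rows:
    row["prediction_fixed"] = apply_threshold(row["confidence_yes"])
    print(row)
```

Note that forcing a 0.5 cut-off makes the label consistent with the confidence, but it may lower F1 compared with the threshold the model chose for itself.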

~Martin
