"Synonym Detection with Word2Vec"

MartinLiebig

Administrator, Moderator, Employee-RapidMiner, RapidMiner Certified Analyst, RapidMiner Certified Expert, University Professor Posts: 3,533

Introducing the Word2Vec Extension to the RapidMiner Marketplace!

We recently published a new extension on our marketplace: an advanced text mining algorithm called Word2Vec. The core operator is called Word2Vec and can be thought of as a learner. In the following I will briefly explain the basics of what Word2Vec does and then show how you can use it in your RapidMiner text mining processes.

What is Word2Vec

One of the key problems of text mining is that distances between words are hard to define. One could also say: "It’s hard to do math with the words themselves." For example, there are words like beautiful and gorgeous, which have similar meanings but are spelled very differently. How should an algorithm know that "beautiful" and "gorgeous" have the same meaning? Or do they only have similar connotations but different meanings?

Word2Vec is a word vector algorithm which attempts to tackle this problem. As the name implies, this operator takes a word and turns it into a vector. So what is so special about Word2Vec? The cool part is that this new Word2Vec vector can be associated with the “meaning” of a word. For example:

1. Let's take a sentence from raw text: RapidMiner has a new extension called Word2Vec

2. Now let's 'window' our sentence and always leave out the word in the middle:

RapidMiner has ___ new extension

has a ___ extension called

new extension ___ Word2Vec

3. Word2Vec defines a probability P for the missing word, depending on the surrounding words. To do so, it assigns a vector to every word. The whole trick of Word2Vec is that it optimizes all vector entries to maximize the probability of the correct gap words and minimize it for all others. The result is one trained vector per word.
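The windowing in step 2 can be sketched in a few lines of Python. This is only an illustration of the idea, not the code inside the Word2Vec operator; the function name `gap_windows` and the radius of two words on each side are assumptions chosen to match the example windows above.

```python
def gap_windows(sentence, radius=2, gap="___"):
    """Yield (window_text, missing_word) for every position in the sentence.

    `radius` is the number of context words kept on each side (an assumption
    chosen to reproduce the example windows; windows shrink at the edges).
    """
    tokens = sentence.split()
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - radius):i]
        right = tokens[i + 1:i + 1 + radius]
        yield " ".join(left + [gap] + right), target


windows = list(gap_windows("RapidMiner has a new extension called Word2Vec"))
for text, missing in windows:
    print(f"{text}  ->  missing word: {missing}")
```

Each pair is one training example: the surrounding words are the input, and the missing word is what the model should assign a high probability to.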

Sample Process with Word2Vec

There are various ways to use Word2Vec as a useful addition to your data science processes. In this sample process we will create a custom stemming dictionary from TripAdvisor review data (available here). All depicted processes are attached to this post.

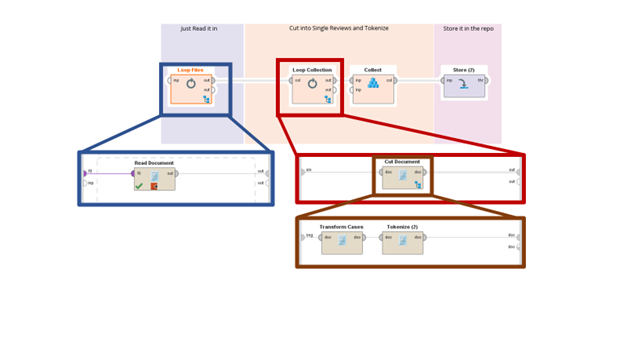

Our analysis is split into three parts. The first process reads in the data and transforms it into a collection of documents, each of which is already tokenized. The second process then trains a Word2Vec model on this collection, and the third process generates a stemming dictionary.

Step 1: Read and Tokenize

The data is provided in one flat file for each hotel with the following structure:

<Overall Rating>4

<Avg. Price>$302

<URL>http://www.tripadvisor.com/ShowUserReviews-g60878-d100504-r22932337-Hotel_Monaco_Seattle_a_Kimpton_Hotel-Seattle_Washington.html

<Author>selizabethm

<Content>Wonderful time- even with the snow! What a great experience! From the goldfish in the room (which my daughter loved) to the fact that the valet parking staff who put on my chains on for me it was fabulous. The staff was attentive and went above and beyond to make our stay enjoyable. Oh, and about the parking: the charge is about what you would pay at any garage or lot- and I bet they wouldn't help you out in the snow!

<Date>Dec 23, 2008

<No. Reader>-1

<No. Helpful>-1

<Overall>5

<Value>4

<Rooms>5

<Location>5

<Cleanliness>5

<Check in / front desk>5

<Service>5

<Business service>-1

We read all files in with a Loop Files + Read Document combination, and then loop over all documents to extract only the content with a Cut Document operator. In the Cut Document we quickly transform all tokens to lower case and tokenize our document. After flattening the collection to one straight collection of documents, we store it in our repository for later use.
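For readers who want to see the same idea outside of RapidMiner, here is a minimal Python sketch of the extract-and-tokenize step. It is not what the Cut Document operator does internally; the helper names `parse_review` and `tokenize` are hypothetical, and splitting on non-letters is an assumption about the tokenization.

```python
import re

# One field per line, in the form "<Tag>value".
TAG_LINE = re.compile(r"^<([^>]+)>(.*)$")


def parse_review(text):
    """Map each '<Tag>value' line of a single review to a dict entry."""
    fields = {}
    for line in text.splitlines():
        match = TAG_LINE.match(line.strip())
        if match:
            fields[match.group(1)] = match.group(2).strip()
    return fields


def tokenize(content):
    """Lower-case the text and split on anything that is not a letter."""
    return re.findall(r"[a-z]+", content.lower())


sample = """<Author>selizabethm
<Content>Wonderful time- even with the snow! What a great experience!
<Date>Dec 23, 2008
<Overall>5"""

review = parse_review(sample)
tokens = tokenize(review["Content"])
print(tokens)
```

A real hotel file contains many reviews, so in practice you would split the file into review blocks first and keep one token list per review.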

Read In Process


Step 2: Train the Model

Training a Word2Vec model is straightforward: get the data, apply Word2Vec, and store the result. The layer size, which defines the length of one vector, is set to a moderate 100 and the window size is set to 7. The iterations parameter is set to a high 50, which should ensure convergence.

Training Process
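If you want to try comparable settings outside of RapidMiner, the sketch below maps the three parameters onto the open-source gensim library. This is a swapped-in library, not the RapidMiner operator, and the two implementations may differ in details. The `min_count` value is an assumption that is not stated in this post, and `train_word2vec` is a hypothetical helper name.

```python
# Settings from the post, expressed with gensim's argument names.
W2V_PARAMS = {
    "vector_size": 100,  # "layer size": length of each word vector
    "window": 7,         # maximum distance between current and predicted word
    "epochs": 50,        # "iterations": passes over the corpus
    "min_count": 1,      # assumption: keep every word; not stated in the post
}


def train_word2vec(tokenized_docs):
    """Train on a list of token lists, e.g. [["great", "hotel"], ...]."""
    # Imported here so the settings above can be inspected without gensim.
    from gensim.models import Word2Vec  # third-party: pip install gensim

    return Word2Vec(sentences=tokenized_docs, **W2V_PARAMS)
```

After training, `model.wv["hotel"]` would return the 100-dimensional vector for a word that occurs in the corpus.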

Step 3: Building the Stemming Dictionary

Building the final dictionary needs a tiny bit of postprocessing. The new operator Extract Vocabulary is able to extract vectors for all or parts of the used corpus. Using Cross distance it is possible to get the distance between two word vectors, measured as cosine similarity.

In the postprocessing we first need to remove the duplicate words which were created by the cross distance.

Afterwards there is a different type of duplicate left. These are the pairs where the first word in one example equals the second word in another example and vice versa.
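To make the similarity measure concrete, here is a small Python sketch of cosine similarity computed for every pair of words. The three-dimensional vectors are made up purely for illustration; real Word2Vec vectors from this process would have 100 entries.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Made-up toy vectors, not output of a trained model.
vectors = {
    "beautiful": [0.9, 0.1, 0.3],
    "gorgeous": [0.8, 0.2, 0.4],
    "parking": [-0.2, 0.9, 0.1],
}

# Every word against every word, including itself and both orderings.
cross = [
    (w1, w2, cosine_similarity(v1, v2))
    for w1, v1 in vectors.items()
    for w2, v2 in vectors.items()
]
for w1, w2, sim in cross:
    print(f"{w1:10s} {w2:10s} {sim:.3f}")
```

Note that the full cross product contains each word paired with itself and each pair in both orders, which is why the result needs cleaning up afterwards.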

Word1 Word2

Gorgeous Beautiful

Beautiful Gorgeous
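One way to remove such mirrored rows is to treat each pair as unordered. The following Python sketch shows the idea; it is not the operator chain used in the attached process, the function name is hypothetical, and the similarity values are made up.

```python
def drop_mirrored_pairs(rows):
    """Keep the first occurrence of each unordered pair and skip self-pairs."""
    seen = set()
    kept = []
    for word1, word2, similarity in rows:
        if word1 == word2:
            continue  # a word paired with itself carries no information
        key = frozenset((word1, word2))  # same key for (a, b) and (b, a)
        if key not in seen:
            seen.add(key)
            kept.append((word1, word2, similarity))
    return kept


rows = [
    ("gorgeous", "beautiful", 0.98),
    ("beautiful", "gorgeous", 0.98),
    ("beautiful", "beautiful", 1.0),
]
deduplicated = drop_mirrored_pairs(rows)
print(deduplicated)  # [('gorgeous', 'beautiful', 0.98)]
```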

The final process, including the postprocessing which creates a stemming dictionary

Finally we apply a threshold on the similarity to produce a well-pruned list. This is controlled with a macro and can thus also be used from the outside. The only thing we need to make sure is that a word is not a synonym more than once. We can do this by removing some additional duplicates.
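The thresholding and the "synonym only once" rule could look like this in Python. This is one possible reading of the step, not the exact logic of the attached process: when a word shows up as a synonym for several targets, the sketch keeps only its most similar target. The threshold of 0.7 and all similarity values are made up for illustration.

```python
def prune(rows, threshold):
    """Keep pairs at or above the threshold; each synonym gets one target."""
    strong = sorted(
        (row for row in rows if row[2] >= threshold),
        key=lambda row: row[2],
        reverse=True,  # most similar pairs are considered first
    )
    used = set()
    kept = []
    for target, synonym, similarity in strong:
        if synonym in used:
            continue  # this word is already mapped to a more similar target
        used.add(synonym)
        kept.append((target, synonym, similarity))
    return kept


rows = [
    ("arrived", "checked", 0.81),
    ("asked", "checked", 0.74),
    ("area", "areas", 0.90),
    ("wall", "parking", 0.20),
]
pruned = prune(rows, threshold=0.7)
print(pruned)
```

Raising the threshold gives a shorter but cleaner list, so it is worth trying a few values and inspecting the result.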

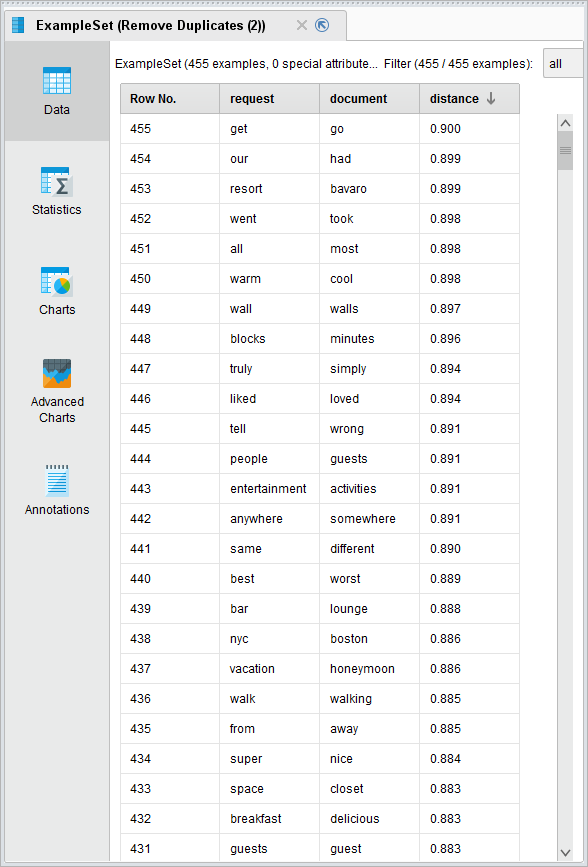

Let's have a look at the results!

Examples of found synonyms

If you examine the results you can see some obvious similarities like wall and walls, and some more clever synonyms like people and guests, anywhere and somewhere.

It gets interesting when words with opposite meanings are considered synonyms (best-worst, warm-cool etc). This is due to the way Word2Vec works: these words fit into the same gaps and are hence considered similar to each other. Depending on your task this can be useful (e.g. topic recognition) or detrimental (e.g. sentiment analysis). For the latter you need to walk through the result list manually and prune it further.

As a last step we can use an Aggregate operator in combination with a Generate Attributes operator to generate regular expressions. For example:

amazing:awesome

american:european

amsterdam:berlin

and:very|with

another:later

anywhere:somewhere

appointed:maintained

area:areas

arrived:checked|arrival

asked:requested|ask
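As a rough Python equivalent of the Aggregate plus Generate Attributes combination, the sketch below groups the synonyms by target word and joins them with `|`. The function name `build_rules` is hypothetical, and the code assumes the words contain only letters, so no regex escaping is needed.

```python
from collections import defaultdict


def build_rules(pairs):
    """Group synonyms by target and join them with '|' as regex alternatives."""
    grouped = defaultdict(list)
    for target, synonym in pairs:
        grouped[target].append(synonym)
    return [
        f"{target}:{'|'.join(synonyms)}"
        for target, synonyms in sorted(grouped.items())
    ]


pairs = [
    ("arrived", "checked"),
    ("arrived", "arrival"),
    ("amazing", "awesome"),
    ("asked", "requested"),
    ("asked", "ask"),
]
rules = build_rules(pairs)
for rule in rules:
    print(rule)
```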

This format can be applied to any document you have. The operator for this is called “Stem Tokens using Example Set” and is part of the Operator Toolbox extension.
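To illustrate what applying such a dictionary means, here is a minimal Python sketch. It assumes that the part before the colon is the replacement and the part after it is a regular expression that must match the whole token; that is my reading of the format, not a description of the operator's internals. The helper names are hypothetical.

```python
import re


def load_rules(lines):
    """Parse 'target:pattern' lines into (target, compiled_regex) pairs."""
    rules = []
    for line in lines:
        target, pattern = line.strip().split(":", 1)
        rules.append((target, re.compile(pattern)))
    return rules


def stem_tokens(tokens, rules):
    """Replace each token by the target of the first rule that matches it."""
    stemmed = []
    for token in tokens:
        for target, regex in rules:
            if regex.fullmatch(token):  # the whole token must match
                token = target
                break
        stemmed.append(token)
    return stemmed


rules = load_rules(["amazing:awesome", "arrived:checked|arrival", "area:areas"])
result = stem_tokens(["awesome", "areas", "checked", "hotel"], rules)
print(result)  # ['amazing', 'area', 'arrived', 'hotel']
```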

Where can I learn more?

Dortmund, Germany

Answers

I've been waiting for this for a long time.

I think the next step should be Doc2Vec.