Synonym Detection with Word2Vec

MartinLiebig

Administrator, Moderator, Employee-RapidMiner, RapidMiner Certified Analyst, RapidMiner Certified Expert, University Professor Posts: 3,533

Introducing the Word2Vec Extension to the RapidMiner Marketplace!

We recently published a new extension on our marketplace: an advanced text mining algorithm called Word2Vec. The core operator is also called Word2Vec and can be thought of as a learner. Below I briefly explain the basics of what Word2Vec does and then show how you can use it in your RapidMiner text mining processes.

What is Word2Vec

One of the key problems of text mining is that distances between words are hard to define. One could also say: "It’s hard to do math with words by themselves in any way." For example, there are words like beautiful and gorgeous, which have similar meanings but are spelled very differently. How should an algorithm know that "beautiful" and "gorgeous" have the same meaning? Or do they only have similar connotations but different meanings?

Word2Vec is a word vector algorithm which attempts to tackle this problem. As the name implies, this operator takes a word and turns it into a vector. So what is so special about Word2Vec? The cool part is that the resulting vector can be associated with the “meaning” of a word. For example:

1. Let's take a sentence from raw text: RapidMiner has a new extension called Word2Vec

2. Now let's 'window' our sentence and always leave out the word in the middle:

RapidMiner has ___ new extension

has a ___ extension called

new extension ___ Word2Vec

3. Word2Vec defines a probability P for the missing word, depending on the surrounding words. To do so, it assigns a vector to every word. The whole trick of Word2Vec is that it optimizes all vector entries to maximize the probability of the correct gap words and minimize it for all others. The result of the training is one vector per word.
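The windowing in step 2 can be sketched in a few lines of Python. This is only an illustration, not the extension's code: the function name `context_windows` and the parameter `half_width` are made up for this example, and it only emits complete windows with two words on each side of the gap.

```python
def context_windows(tokens, half_width=2):
    """Return (context, target) pairs; the target is the word left out in the middle."""
    pairs = []
    for i in range(half_width, len(tokens) - half_width):
        # Words to the left and right of position i, without the word at i itself.
        context = tokens[i - half_width:i] + tokens[i + 1:i + 1 + half_width]
        pairs.append((context, tokens[i]))
    return pairs

sentence = "RapidMiner has a new extension called Word2Vec".split()
pairs = context_windows(sentence)
for context, target in pairs:
    print(context, "->", target)
```

Each pair is one training example: the model sees the context words and is asked to give a high probability to the target word.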

Sample Process with Word2Vec

There are various ways to use Word2Vec as a useful addition to your data science processes. In this sample process we will create a custom stemming dictionary from TripAdvisor review data (available here). All depicted processes are attached to this post.

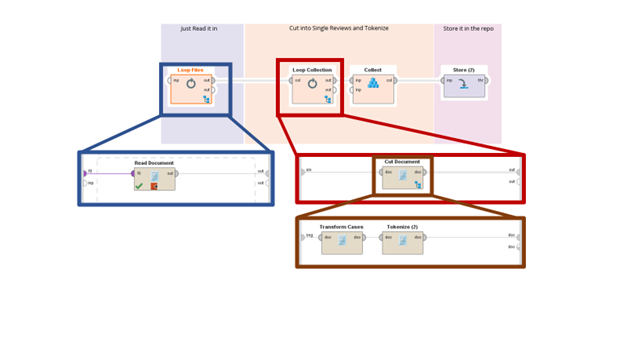

Our analysis is split into three parts. The first process reads in the data and transforms it into a collection of documents, each of which is already tokenized. The second process then trains a Word2Vec model on this collection, and the third process generates the stemming dictionary.

Step 1: Read and Tokenize

The data is provided in one flat file for each hotel with the following structure:

<Overall Rating>4

<Avg. Price>$302

<URL>http://www.tripadvisor.com/ShowUserReviews-g60878-d100504-r22932337-Hotel_Monaco_Seattle_a_Kimpton_Hotel-Seattle_Washington.html

<Author>selizabethm

<Content>Wonderful time- even with the snow! What a great experience! From the goldfish in the room (which my daughter loved) to the fact that the valet parking staff who put on my chains on for me it was fabulous. The staff was attentive and went above and beyond to make our stay enjoyable. Oh, and about the parking: the charge is about what you would pay at any garage or lot- and I bet they wouldn't help you out in the snow!

<Date>Dec 23, 2008

<No. Reader>-1

<No. Helpful>-1

<Overall>5

<Value>4

<Rooms>5

<Location>5

<Cleanliness>5

<Check in / front desk>5

<Service>5

<Business service>-1

We read all files in with a Loop Files + Read Document combination, and then loop over all documents to extract only the content with a Cut Document operator. In the Cut Document we quickly transform all tokens to lower case and tokenize our document. After flattening the collection to one straight collection of documents, we store it in our repository for later use.
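Outside of RapidMiner, the same read-and-tokenize step could look roughly like the sketch below. It assumes every review body sits on a single line starting with `<Content>`, as in the sample above, and that tokens are split on non-letter characters; the helper names `extract_contents` and `tokenize` are hypothetical.

```python
import re

def extract_contents(raw_text):
    """Return the text of every <Content> line in one hotel file."""
    prefix = "<Content>"
    return [line[len(prefix):].strip()
            for line in raw_text.splitlines()
            if line.startswith(prefix)]

def tokenize(text):
    """Lower-case the text and split it on anything that is not a letter."""
    return [tok for tok in re.split(r"[^a-z]+", text.lower()) if tok]

# Shortened excerpt of the file structure shown above.
sample = """<Author>selizabethm
<Content>Wonderful time- even with the snow! What a great experience!
<Date>Dec 23, 2008"""

documents = [tokenize(content) for content in extract_contents(sample)]
print(documents)
```

The result is one list of lower-case tokens per review, which corresponds to the flattened collection of documents stored in the repository.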

Read In Process


Step 2: Train the Model

Training a Word2Vec model is straightforward: get the data, apply Word2Vec, and store the result. The layer size, which defines the length of one vector, is set to a moderate 100 and the window size is set to 7. The iterations parameter is set to a high 50, which should ensure convergence.
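To make the three parameters more tangible, here is a toy continuous-bag-of-words trainer in plain Python. It is a teaching sketch only and not what the Word2Vec operator runs internally: it uses a full softmax, the learning rate `lr` is an arbitrary choice, and it assumes the window size counts the total width including the gap word (so 7 means three words on each side). The defaults mirror the settings above, while the toy run uses a smaller layer size.

```python
import math
import random

def train_cbow(sentences, layer_size=100, window=7, iterations=50, lr=0.05, seed=0):
    """Toy CBOW with a full softmax; returns ({word: vector}, loss per iteration)."""
    rng = random.Random(seed)
    vocab = sorted({w for s in sentences for w in s})
    index = {w: i for i, w in enumerate(vocab)}
    size = len(vocab)
    w_in = [[rng.uniform(-0.5, 0.5) for _ in range(layer_size)] for _ in range(size)]
    w_out = [[0.0] * layer_size for _ in range(size)]
    half = window // 2  # assumption: window is the total width around the gap
    losses = []
    for _ in range(iterations):
        total = 0.0
        for s in sentences:
            ids = [index[w] for w in s]
            for pos, target in enumerate(ids):
                context = ids[max(0, pos - half):pos] + ids[pos + 1:pos + 1 + half]
                if not context:
                    continue
                # Hidden layer: mean of the context word vectors.
                h = [sum(w_in[c][d] for c in context) / len(context)
                     for d in range(layer_size)]
                scores = [sum(w_out[j][d] * h[d] for d in range(layer_size))
                          for j in range(size)]
                top = max(scores)
                exps = [math.exp(x - top) for x in scores]
                norm = sum(exps)
                probs = [e / norm for e in exps]
                total -= math.log(probs[target])
                # Gradient of the loss w.r.t. the scores: p_j - 1[j == target].
                err = probs[:]
                err[target] -= 1.0
                grad_h = [sum(err[j] * w_out[j][d] for j in range(size))
                          for d in range(layer_size)]
                for j in range(size):
                    for d in range(layer_size):
                        w_out[j][d] -= lr * err[j] * h[d]
                for c in context:
                    for d in range(layer_size):
                        w_in[c][d] -= lr * grad_h[d] / len(context)
        losses.append(total)
    return {w: w_in[index[w]] for w in vocab}, losses

# Hypothetical mini corpus, for illustration only.
corpus = [
    "the staff was friendly and the room was clean".split(),
    "the staff was helpful and the room was tidy".split(),
    "the room was clean and the staff was helpful".split(),
]
vectors, losses = train_cbow(corpus, layer_size=10, window=7, iterations=50)
print(len(vectors["staff"]), losses[0], losses[-1])
```

The layer size fixes the length of each returned vector, the window decides how many neighbours fill the context, and more iterations give the loss more passes to go down.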

Training Process

Step 3: Building the Stemming Dictionary

Building the final dictionary needs a tiny bit of postprocessing. The new operator Extract Vocabulary is able to extract the vectors for all or parts of the corpus used. Using Cross Distances it is then possible to get the distance between two word vectors, measured as cosine similarity.

In the postprocessing we first need to remove the duplicates of words that were created by the cross distance.

Afterwards there is a second type of duplicate: pairs where the first word of one example equals the second word of another example and vice versa.

Word1 Word2

Gorgeous Beautiful

Beautiful Gorgeous
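A rough Python equivalent of the cross distance plus both clean-up steps is sketched below. It assumes that the first kind of duplicate is a word paired with itself, and it uses made-up two-dimensional vectors; `unique_pairs` is a hypothetical helper, not an operator.

```python
import math
from itertools import product

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def unique_pairs(vectors):
    """Cross every word with every word, keeping each unordered pair once."""
    pairs = {}
    for w1, w2 in product(vectors, repeat=2):
        if w1 == w2:
            continue  # a word paired with itself
        key = tuple(sorted((w1, w2)))  # (gorgeous, beautiful) equals (beautiful, gorgeous)
        if key not in pairs:
            pairs[key] = cosine_similarity(vectors[w1], vectors[w2])
    return pairs

# Hypothetical vectors, for illustration only.
toy_vectors = {"beautiful": [0.9, 0.1], "gorgeous": [0.8, 0.2], "parking": [0.1, 0.9]}
pairs = unique_pairs(toy_vectors)
for (w1, w2), sim in sorted(pairs.items()):
    print(w1, w2, round(sim, 3))
```

Sorting the two words of a pair before using them as a key is what collapses the mirrored rows from the table above into one.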

The final process, including the postprocessing that creates the stemming dictionary

Finally we apply a threshold on the similarity to produce a well-pruned list. This is controlled with a macro and can thus also be used from the outside. The only thing we need to make sure is that a word is not a synonym more than once. We can do this by removing some additional duplicates.
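One possible way to express the threshold and the "not a synonym more than once" rule in Python is shown below. The threshold value of 0.8 and the greedy strategy (most similar pairs first) are assumptions made for this sketch; the process itself controls the threshold with a macro.

```python
def prune_pairs(pairs, threshold=0.8):
    """Keep pairs above the threshold; every synonym ends up under one stem only."""
    stems = {}     # stem -> list of synonyms
    assigned = {}  # synonym -> stem, guards against double assignment
    for (w1, w2), sim in sorted(pairs.items(), key=lambda kv: -kv[1]):
        if sim < threshold:
            break  # sorted by descending similarity, nothing further can pass
        if w2 in stems and w1 not in stems:
            w1, w2 = w2, w1  # attach the new word to the already existing stem
        if w2 in assigned or w2 in stems or w1 in assigned:
            continue  # one of the words is already used elsewhere
        stems.setdefault(w1, []).append(w2)
        assigned[w2] = w1
    return stems

# Hypothetical similarities, for illustration only.
example_pairs = {
    ("area", "areas"): 0.95,
    ("arrived", "checked"): 0.90,
    ("arrival", "arrived"): 0.85,
    ("best", "worst"): 0.50,
}
stems = prune_pairs(example_pairs, threshold=0.8)
print(stems)
```

Raising the threshold gives a shorter but cleaner list; lowering it lets in more pairs, including questionable ones.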

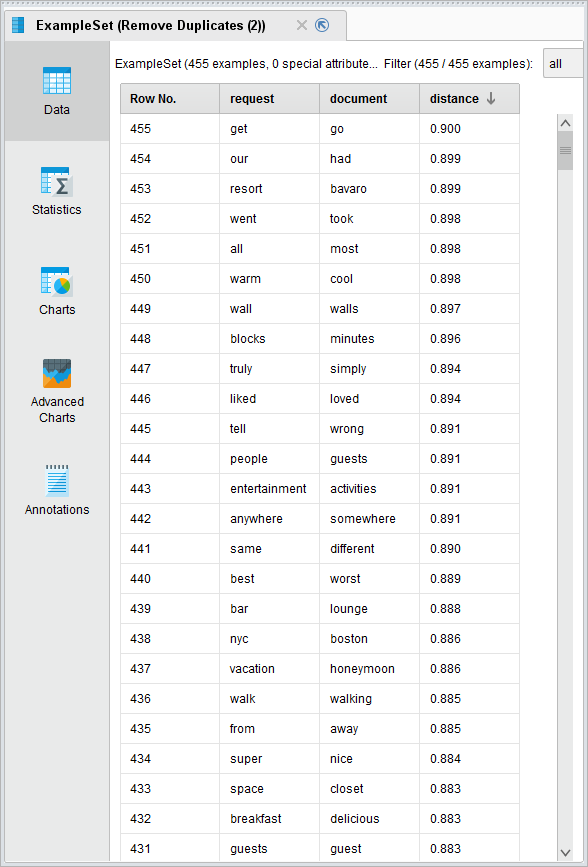

Let's have a look at the results!

Examples of found synonyms

If you examine the results you can see some obvious similarities like wall and walls, and some more clever synonyms like people and guests, or anywhere and somewhere.

It gets interesting when words with opposite meanings are considered synonyms (best-worst, warm-cool, etc.). This is due to the way Word2Vec works: these words fit into the same gaps and are hence considered similar to each other. Depending on your task this can be useful (e.g. topic recognition) or detrimental (e.g. sentiment analysis). For the latter you need to walk through the result list manually and prune it further.

As a last step we can use an Aggregate operator in combination with a Generate Attributes operator to generate regular expressions. For example:

amazing:awesome

american:european

amsterdam:berlin

and:very|with

another:later

anywhere:somewhere

appointed:maintained

area:areas

arrived:checked|arrival

asked:requested|ask

This format can be applied to any document you have. The operator for this is called “Stem Tokens using Example Set” and is part of the Operator Toolbox extension.
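As a rough illustration of how such rules can be built and applied, the sketch below reads each rule as `stem:pattern`, where every token that fully matches the regular expression on the right is replaced by the word on the left. This reading is an assumption for the example, and the helpers `to_rules` and `stem_tokens` are hypothetical, not part of any extension.

```python
import re

def to_rules(stems):
    """Format {stem: [synonyms]} as 'stem:syn1|syn2' lines."""
    return [f"{stem}:{'|'.join(syns)}" for stem, syns in sorted(stems.items())]

def stem_tokens(tokens, rules):
    """Replace every token that fully matches a rule's pattern with the rule's stem."""
    compiled = []
    for rule in rules:
        stem, pattern = rule.split(":", 1)
        compiled.append((stem, re.compile(pattern)))
    out = []
    for tok in tokens:
        for stem, pattern in compiled:
            if pattern.fullmatch(tok):
                tok = stem
                break
        out.append(tok)
    return out

rules = to_rules({"arrived": ["checked", "arrival"], "area": ["areas"]})
stemmed = stem_tokens(["we", "checked", "the", "areas"], rules)
print(rules)
print(stemmed)
```

Joining the synonyms with `|` is what turns the aggregated list into a regular expression with alternatives.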

Where can I learn more?

Dortmund, Germany

Comments

Thanks very much @mschmitz for this fantastic process. Just experimenting now.

If I want to analyse a group of documents and find not only the single words that have a vector relationship, but also bigram and trigram phrases, is that possible? Or does it melt your computer...

Can this be combined with any other text processing or modified to produce topical buckets of terms?

I was wondering if it is possible to split the input documents by punctuation.

I am inputting webpages that have headings etc., at present I am stripping out stop words, short strings 4 letters.

Therefore, I end up with just long strings.

However, was thinking if I split each document by sentence or paragraph/ list content? I could then create many separate documents (from one html page) that could be classified or grouped by similarity.

Using document to similarity to process those buckets of sentences.

I would get words output in the dictionary of Word2vec that are not just related to each other, but related to the concept (as defined by the documents to similarity groupings of sentences or lists extracted from the html document.)

I am probably not thinking correctly about it.

My goal is to end up with buckets of words that could then be used in the construction of paragraphs within a newly written document and that are known to be related in vector space. Not only to each other, but also to other words within the topical buckets.

(The buckets being defined by the pre-processing using documents to similarity) rather than just individual words related to each other.

I used the ITF/TO before and that works ok to find bigrams and trigram strings to get them on the page.

However, the problem is the same you end up with the phrases on the page, but not necessarily near to each other.

It works for creating a statistically similar page (google), but it's very time consuming, with lots of manual pruning.

Then you have to post process your document for synonyms to ensure you have not overegged it.

I would like to create some sort of process that stitches several processes together ITF/TO Word2Vec, Document clustering, LSI to produce some sort of master grouping of words.

That way it would just be a matter of taking that grouping of n words and forming a meaningful paragraph out of it.

Knowing in advance that it has ticked all the boxes.

I purchased the book, but have not picked it up yet.

I was also looking at this: lda2vec

https://multithreaded.stitchfix.com/blog/2016/05/27/lda2vec/#topic=5&lambda=1&term=

Is this possible in RapidMiner?

regards lee

Hi @websiteguy,

first of all: thanks for the kind words and for using the operator. It is always cool to see people use the tools you write.

Let's go through your questions one by one:

Word2Vec by itself does not support bigrams. But maybe you can find frequent bigrams using process_documents and then use Replace Tokens to replace e.g. not good with not_good, which is then treated as one word by Word2Vec.
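The bigram idea can be sketched outside of RapidMiner like this. It is a plain Python illustration under simple assumptions: a bigram counts as frequent when it appears at least `min_count` times, and both the function name and that parameter are made up for the example.

```python
from collections import Counter

def merge_frequent_bigrams(documents, min_count=2):
    """Join adjacent token pairs seen at least min_count times with an underscore."""
    counts = Counter(pair for doc in documents for pair in zip(doc, doc[1:]))
    frequent = {pair for pair, n in counts.items() if n >= min_count}
    merged_docs = []
    for doc in documents:
        merged, i = [], 0
        while i < len(doc):
            if i + 1 < len(doc) and (doc[i], doc[i + 1]) in frequent:
                merged.append(doc[i] + "_" + doc[i + 1])
                i += 2  # skip the second half of the merged bigram
            else:
                merged.append(doc[i])
                i += 1
        merged_docs.append(merged)
    return merged_docs

docs = [["food", "was", "not", "good"], ["service", "not", "good", "either"]]
merged = merge_frequent_bigrams(docs)
print(merged)
```

After this step, not_good is a single token and gets its own vector when the model is trained.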

Sure, Cut Document should do the trick.

You can treat whole sentences as words in the operator. This also includes things like tags or parts of code. The only thing I would be worried about is whether you have a large enough sample.

I would consider clustering the vectors with some cosine similarity measure.
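One simple way to do this is a k-means variant that compares vectors by cosine similarity (often called spherical k-means). The sketch below is only meant to show the idea: the vectors are invented, the number of clusters `k` is an arbitrary choice, and the initialisation with the first k words is deliberately naive.

```python
import math

def normalize(v):
    """Scale a vector to length 1 (a zero vector is returned unchanged)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else v

def cosine_kmeans(vectors, k=2, iterations=10):
    """Assign each word to the centroid with the highest cosine similarity."""
    words = sorted(vectors)
    unit = {w: normalize(vectors[w]) for w in words}
    centroids = [unit[w] for w in words[:k]]  # naive initialisation
    labels = {}
    for _ in range(iterations):
        for w in words:
            # For unit vectors the dot product equals the cosine similarity.
            sims = [sum(a * b for a, b in zip(unit[w], c)) for c in centroids]
            labels[w] = sims.index(max(sims))
        for j in range(k):
            members = [unit[w] for w in words if labels[w] == j]
            if members:
                centroids[j] = normalize([sum(col) for col in zip(*members)])
    return labels

# Hypothetical vectors, for illustration only.
toy_vectors = {
    "beautiful": [0.9, 0.1], "gorgeous": [0.8, 0.2],
    "parking": [0.1, 0.9], "garage": [0.2, 0.8],
}
labels = cosine_kmeans(toy_vectors, k=2)
print(labels)
```

Words that end up with the same label form one topical bucket.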

Never saw this before, but thanks for the link! This is not yet supported, but we may investigate it. The LDA vis package for Python seems to be a good resource for the recent LDA operator I published in the toolbox.

Cheers,

Martin

Dortmund, Germany

Hi @mschmitz

Thanks for the quick reply,

So by stripping out stop words and turning the rest into bi-grams or tri-grams, I create a document, then collect and save?

Then I process these strings of two or three words with the connecting _ and each of them would be a string used in the vector, is that right?

-----------

I am trying to create a new document

That has a statistical similarity to the original set of documents, by including these word2vec results in its creation.

(I have found the ITF/TO works but it does not allow for distance, so you have to slavishly ensure the inclusion of bigrams/tri-grams that occur in the original documents to ensure similarity. Even then, you have to return to your document at a later date and shift the usage of the strings about to get the nearness to other bigram strings.)

...

If clustering were done on our newly created document, the original set of documents and a random set of other docs, the new doc would fall in to the same cluster as the original set as it "is like" the original set.

---------------------------

At present, the vector interpretation of documents produces words from a set of documents that co-occur (by a distance of K words/synonyms from each other) therefore these words have a relationship. Is that correct?

So when processing documents, we get a list of words and co-occurring examples of words, that acts as a representation to commonalities of word usage as defined by 'K' distance (the stemrule)

So word2vec helps us to know we should include "acne|naturally|grab" in a sentence in our new document.

"For sufferers of acne, I would always treat it naturally, that’s why I suggest you grab a copy of my new book"

However, not how near this sentence should be to another sentence that includes another stemrule?

So if I used another stemrule in a sentence:

"It’s absolutely vital that when keeping an injury or trauma protected we act quickly to ensure the bone does not shift"

These two new sentences could be in the same paragraph or distant from each other in the new document.

Is there any way to know this "nearness of stemrules"? So that the vector stemrules are used in a way that takes into account their distance from other stemrules?

Therefore, we get "grouped stem rules" and therefore our new document we produce is more "like the originals"

Or, is this essentially what lda2vec is doing?

----------------------

"I would consider clustering the vectors with some cosine similarity measure"

Any chance you could show me how to do this, or explain a little further?

thanks for your help,

regards lee

-----------------

There seem to be a lot of questions floating around the Community on how to use Word2Vec with Twitter data. I made a quick and dirty process on how to do it here.

Now, if you want to do some proper text mining, this data needs to be converted to documents (so text format). There are quite a few operators with different options for the job, so it all depends on what you actually want or need to do, and how your Excel file is constructed.
