Association Rule Creator - PDF files - Memory limitations

Learner III

Hi,

I am a newbie here. Let me give you an overview of what I am doing.

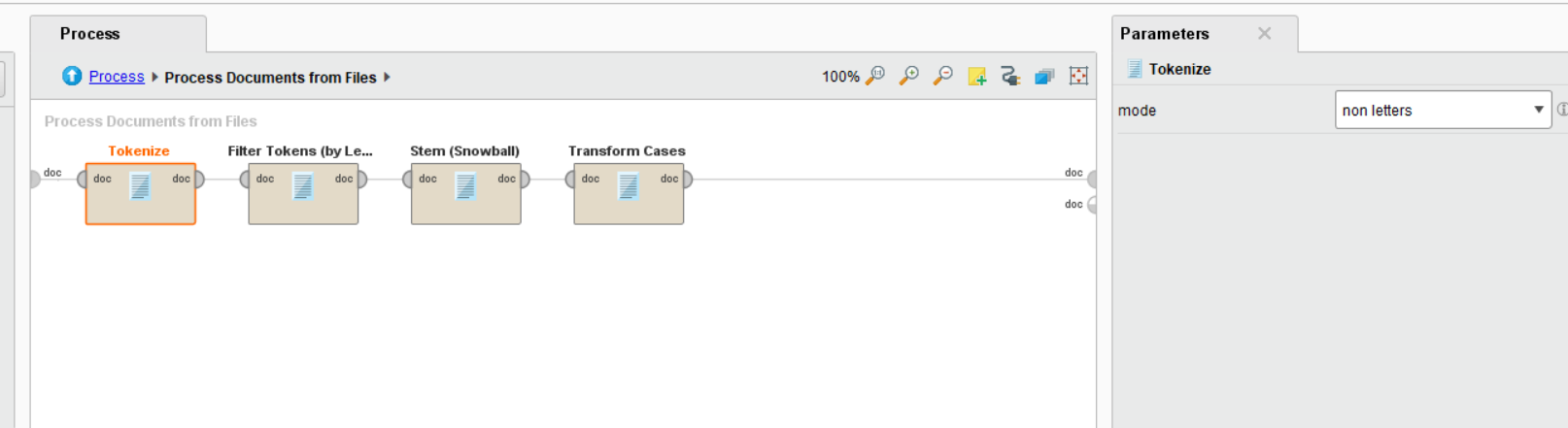

I am processing PDF files (generally trend reports) and want to create association rules for them. I saw a tutorial where someone converted PDFs into .txt files in order to process them, so I converted those PDFs to text files online and tried to process them. I did it this way:

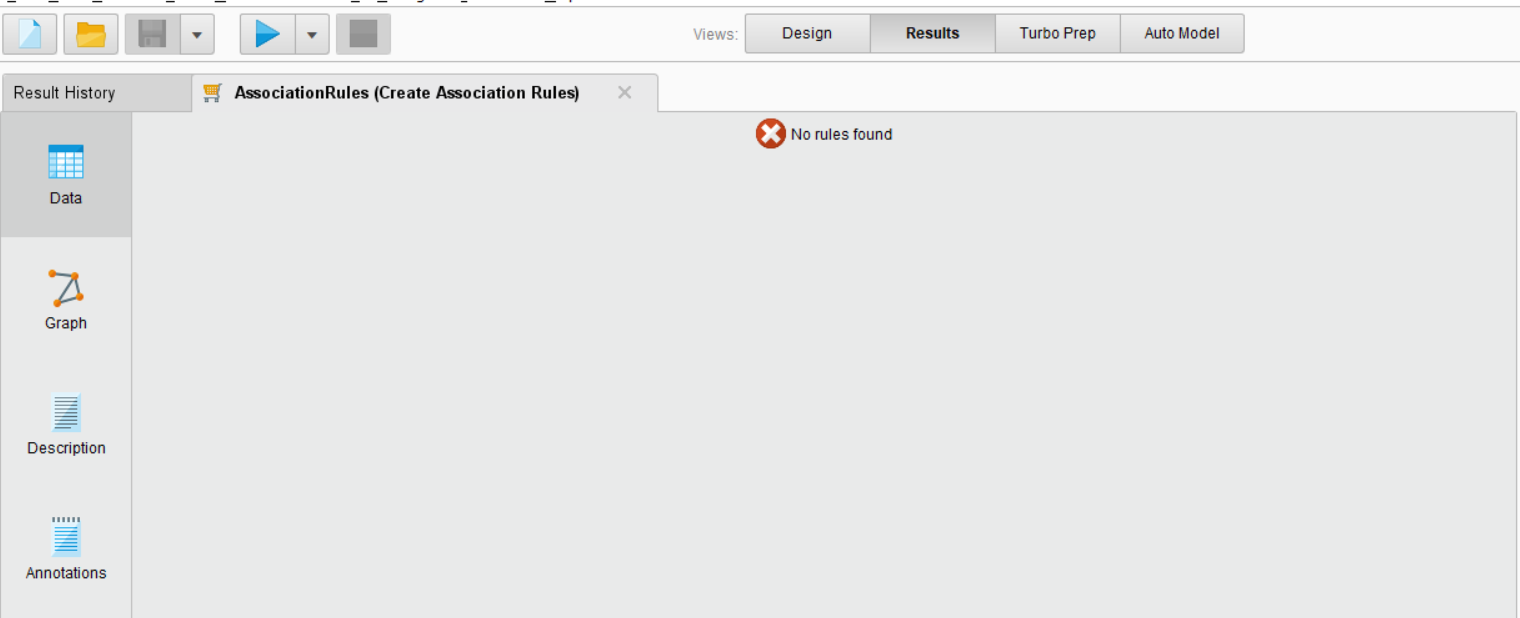

First, I didn't get any association rules in the result.

Then I tried playing around with the text processing parameters, changing "PruneMethod" from "absolute" to "percentual" or "degree", but I got a memory error, even though I have around 450 GB of free disk space. And there are only 7 converted PDF files.

Please guide me how to get association rules.

Kind Regards,

Rashid

Best Answer

lionelderkrikor

RapidMiner Certified Analyst, Member Posts: 1,195

Hi again @rashidaziz411,

I must admit that I am a little lost. When I test the first version of the process (the one that works with .txt files) on your 7 .txt files, a dataset with more than 7,500 attributes is generated, and the process has no problem generating the association rules (the calculation of the association rules is instantaneous!).

I can't explain this behavior.

In conclusion, as a workaround, you can convert all your PDF files into TXT files.

Regards,

Lionel

Answers

Hi @rashidaziz411,

Can you share your process (cf. READ BEFORE POSTING / §2. Share your XML process) and your files so that we can better understand the problem?

Have you tried playing with the parameters of the Create Association Rules operator?

Regards,

Lionel

Hi @lionelderkrikor,

Thank you for your response. Yes, I tried that too, but it didn't succeed.

Here are the files.

1. Process (workflow)

2. Number of files, which I am working on.

Kind Regards,

Rashid

Hi @rashidaziz411,

I just checked your process and your files.

At first glance, your Process Documents from Files operator doesn't produce any output (it doesn't extract the words from your .txt files).

I will continue to search...

Regards,

Lionel

Hi again @rashidaziz411,

I found some association rules (with 2 of your files: Accenture and Deloitte) by setting:

- in the parameters of the FP-Growth operator, min requirement = frequency / min frequency = 1

As you will see, the support of these associations is very close to 0.
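To make the terms in this setting concrete, here is a minimal Python sketch of what frequency, support and confidence mean for word sets across documents. It is a generic illustration on invented toy data, not RapidMiner's implementation; the `documents` list and the function names are assumptions made for this example.

```python
# Hypothetical toy data: each document reduced to its set of distinct words.
documents = [
    {"digital", "cloud", "trend"},
    {"digital", "trend", "risk"},
]

def frequency(itemset, docs):
    """Absolute number of documents that contain every word in `itemset`."""
    return sum(1 for doc in docs if itemset <= doc)

def support(itemset, docs):
    """Fraction of documents that contain every word in `itemset`."""
    return frequency(itemset, docs) / len(docs)

def confidence(premise, conclusion, docs):
    """support(premise and conclusion together) divided by support(premise)."""
    return support(premise | conclusion, docs) / support(premise, docs)

# With a minimum frequency of 1, an itemset seen in a single document qualifies.
print(frequency({"cloud"}, documents))                # 1
print(support({"digital", "trend"}, documents))       # 1.0
print(confidence({"digital"}, {"cloud"}, documents))  # 0.5
```

A minimum frequency of 1 is the most permissive threshold possible, which is why rules start to appear once it is set.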

I hope it helps,

Regards,

Lionel

NB : I redesigned your process with the Loop Files operator because I had difficulties with the Process Documents from Files operator.

The process :

Hi @lionelderkrikor,

I have changed the FP-Growth parameter to 1 (for frequency), but it's still not working.

I didn't understand what you described as the "redesigned process". How do I do that? Does it have to be run through "CMD" or some kind of coding? If so, I tried, but I am unable to open "cmd" and I don't know why.

Or can this "redesigning" be done through the GUI?

P.S. Why is "Process Documents from Files" not producing any output? Is there a problem with the files or with this node? Can't it be done directly on the PDF files' directory (without converting them to .txt)?

I would love to hear from you.

Kind Regards,

Rashid

Hi @rashidaziz411,

As I said, the Process Documents from Files operator doesn't produce any output and I don't know why.

So I decided to explore another solution to "extract" the words: using Loop Files + Process Documents from Data.

To import the process I shared (the XML file) in my previous post, you have to follow these steps :

1. Activate the XML panel :

2. Copy and paste the XML code in the XML panel :

3. Click on the green mark :

4. That's it: the process appears in the process window.

Regards,

Lionel

Hi @lionelderkrikor

Thank you for your detailed answer. I am glad that it now shows some association rules: I only changed the directory for "Loop Files" to the folder of text files on my PC, and it generates rules.

But I have some questions regarding this process:

1. Looking at the XML process you shared above, at LINE 37 you apply a filter to only two files (Accenture and Deloitte). Why not all 7 files? I only shared 7 files, but I have 31 files to work on. If I want to process all of them, do I have to list them the same way you did?

2. At LINE 41 of the XML process, you set a parameter value for only one file (Accenture). Why only that one, when LINE 37 mentions 2 files? And how did you do that: by typing it manually into the XML, or through some node in the GUI?

3. At LINE 11 of the XML process, which parameter value are you setting? I have no idea which directory path appears there. Is it the path of the text files' folder or something else?

4. Both of these parameter values (LINE 11 and LINE 41) still refer to your directory path (C:\lionel\......), and both stayed the same when I generated the association rules. Do I have to change them manually in the XML, or is there a setting somewhere in the GUI, like the directory option in "Loop Files", where I can change the path by just clicking?

Kind Regards,

Rashid

Hi @rashidaziz411,

First, as a general rule, XML files are just for sharing processes between users. Don't modify these files. Import the processes into RapidMiner and always work in the RapidMiner GUI.

Here is the "general" process:

- To sum up, after importing this process into RapidMiner, you just have to :

I hope it helps and that this process meets your needs.

Regards,

Lionel

NB : Indeed, I was applying the filter to just two files (Accenture and Deloitte) for testing only. Now the filter is based on the .txt extension, so the process will loop over all the .txt files inside the path you set (the path where you stored all your files).

NB2 : The other path you mentioned belonged to the Process Documents from Files operator, which was disabled. This operator has now been deleted and no longer appears.

Hi again @rashidaziz411,

Here is an experimental modified version of the previous process that works directly with .PDF files.

As with the previous process, you have to set the path where your .PDF files are stored in the Loop Files operator's parameters.

The process :

Regards,

Lionel

Hi @lionelderkrikor

Thank you for your help. The first solution you suggested (with text files) is working fine. The second XML process (the experimental one for PDFs), however, reports an error on the "Loop Files" parameter, saying that it can't read.

Kind Regards,

Rashid

Hi @rashidaziz411,

I tested this process with some of my .PDF files and it works fine : I'm not able to reproduce your bug.

Can you share some of your "problematic" .PDF files ?

I think that this issue is related to the encoding method of the Read Document operator.

In parallel, can you try other methods ?

Regards,

Lionel

Hi @lionelderkrikor

I was getting the previous error when I gave the path to the folder that contains all the files combined (32 files).

After your latest response, I changed the path to the first folder only (consultancy). Now it gives me a memory error after only 3% of the association rule generation, for the consultancy folder alone (which contains 7 files, the ones I shared with you as text files). I have about 25 GB of free space on the C drive and 450 GB on the D drive.

I am attaching 6 of the 7 PDF files here.

Edited: one file (Deloitte) exceeded the size limit.

Kind Regards,

Rashid

Hi @rashidaziz411,

I executed the process with your 6 .PDF files and I ran into the same problem as you.

But it is not a storage problem, it is an insufficient RAM problem (personally, I have 16 GB of RAM on my PC).

After investigation, this is not surprising: even with these 6 files, you have a very wide dataset, 6 rows and 4443 attributes!

(With a small .pdf file generating 10 attributes, the process works.)

So in conclusion, I'm afraid that you need more RAM on your computer to execute the process with all your files.
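To illustrate where attribute counts like these come from, here is a minimal Python sketch, assuming one attribute per distinct word across all documents, and showing how a document-frequency prune shrinks that list. It is a generic illustration, not RapidMiner's text processing; the sample texts, the `build_vocabulary` helper and its `min_docs` threshold are invented for this example.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of distinct alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))

def build_vocabulary(texts, min_docs=1):
    """Return the sorted words that occur in at least `min_docs` documents."""
    counts = {}
    for text in texts:
        for word in tokenize(text):
            counts[word] = counts.get(word, 0) + 1
    return sorted(word for word, n in counts.items() if n >= min_docs)

# Hypothetical stand-ins for the extracted report texts.
texts = [
    "Digital trends reshape banking",
    "Cloud trends reshape retail",
    "Digital cloud strategy",
]

full = build_vocabulary(texts)                # one attribute per distinct word
pruned = build_vocabulary(texts, min_docs=2)  # keep words found in 2+ documents

print(len(full), len(pruned))  # 7 4
```

Real reports contain thousands of distinct words, so the unpruned attribute list grows quickly even for a handful of files.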

Regards,

Lionel

Hi @lionelderkrikor

Thanks for your help. I also have 16 GB of RAM, so I will convert the PDFs to text files instead.

Once again, thank you. I really appreciate your efforts in this regard.

Best Regards,

Rashid

Hi @rashidaziz411,

You're welcome Rashid.

Good luck with your project!

Best regards,

Lionel

I experienced a similar problem with the memory issue. I used the same process as @rashidaziz411 the first time. It didn't even work with 4 txt files. I then used the process as suggested by @lionelderkrikor, and everything seems to work great.

However, I am not sure what "Loop files" (especially Read CSV, because it is a plain text file and not comma separated) and "Union Append" do in this case. Sorry for the ignorant question. I'm rather new to this.

Thank you

Cheers,

Indi

The Loop Files operator reads the text of the .txt (or .pdf) files stored in the specified directory.

You're right, the "Union Append" subprocess is not necessary in this case: you can use just the "Append" operator.

This operator is used to "aggregate" the different files into one example set.

To understand better, set a "Breakpoint After" on the Loop Files operator and on the Append (or Union Append) operator.
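For readers who think more easily in code, the loop-then-append pattern described above can be sketched in plain Python. This is only an analogy under stated assumptions, not what RapidMiner runs internally; the `load_text_files` helper and the sample file names are hypothetical.

```python
from pathlib import Path
import tempfile

def load_text_files(directory, pattern="*.txt"):
    """Loop over matching files and append one row (file name + text) per file."""
    rows = []
    for path in sorted(Path(directory).glob(pattern)):
        text = path.read_text(encoding="utf-8", errors="replace")
        rows.append({"file": path.name, "text": text})  # the "append" step
    return rows

# Demo on a temporary folder so the sketch runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "a.txt").write_text("digital trends", encoding="utf-8")
    Path(tmp, "b.txt").write_text("cloud trends", encoding="utf-8")
    Path(tmp, "notes.pdf").write_bytes(b"%PDF-")  # skipped by the *.txt filter
    rows = load_text_files(tmp)

print([row["file"] for row in rows])  # ['a.txt', 'b.txt']
```

Each file becomes one row, and the combined list plays the role of the single example set that the later text processing steps receive.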

I hope it helps,

Regards,

Lionel