Parsing JSON in RapidMiner using the WebAutomation Extension by Old World Computing

The WebAutomation Extension by Old World Computing

Defining a format to extract a simple table from JSON data

So how exactly does it work? As an example, let’s say you are provided with data about books in JSON format. The data comes from an internal database where it is stored as a JSON string.

{

"books":[

{

"title":"Java ist auch eine Insel",

"subtitle":"Einführung, Ausbildung, Praxis",

"authors":[

{

"first name":"Christian",

"last name":"Ullenboom"

}

],

"language":"German",

"edition":"13., aktualisierte und überarbeitete Auflage",

"publication date":2018,

"publishing company":"Bonn Rheinwerk Verlag",

"keywords":[

"Java Standard Edition 8",

"Java Standard Edition 9",

"Programming"

]

},

{

"title":"The elements of statistical learning",

"subtitle":"data mining, inference, and prediction",

"authors":[

{

"first name":"Trevor",

"last name":"Hastie"

},

{

"first name":"Robert",

"last name":"Tibshirani"

},

{

"first name":"Jerome",

"last name":"Friedman"

}

],

"language":"English",

"edition":"2. ed., corr. at 5. print.",

"publication date":2011,

"publishing company":"NY Springer",

"keywords":[

"Machine Learning",

"Statistics"

]

},

{

"title":"Learning Spark",

"subtitle":"lightning-fast data analysis",

"authors":[

{

"first name":"Holden",

"last name":"Karau"

},

{

"first name":"Andy",

"last name":"Konwinski"

},

{

"last name":"Wendell"

},

{

"first name":"Matei",

"last name":"Zaharia"

}

],

"language":"English",

"publication date":2015,

"publishing company":"Beijing [u.a.] O'Reilly",

"keywords":[

"Machine Learning",

"Cluster",

"Big Data"

]

}

]

}

Looking at the JSON, you will see that there are several relations within the data that cannot be expressed as a simple table. For example, there can be various author objects per book. For now however, we are only going to extract the tabular data, but the extension can easily extract these relations as separate, relational tables or denormalized with duplicated entries.

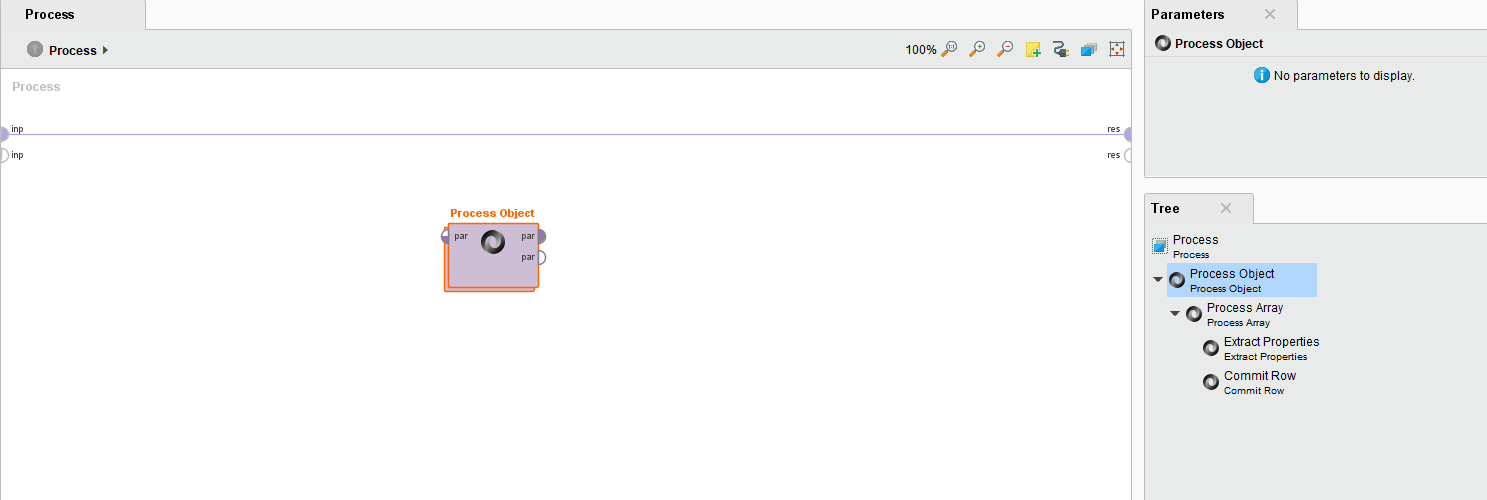

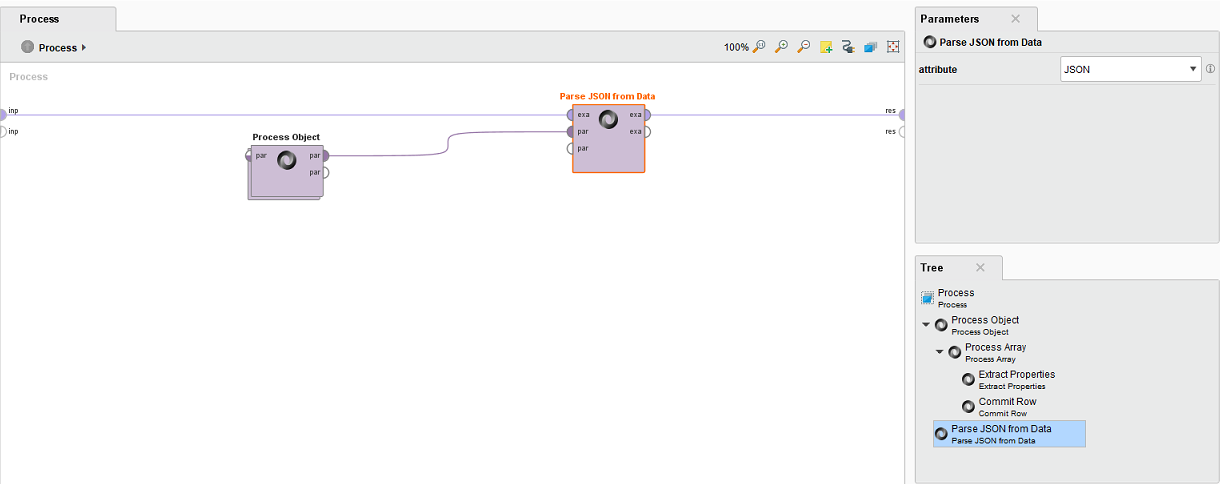

As can also be seen in the tree view to the right, the extension uses operators with subprocesses to model the JSON’s structure. There is an Array operator within the Process Object operator, which in turn houses Extract Properties and Commit Row operators.

Together, these operators define the structure of how data is extracted from the JSON file. Process Object and Process Array will tell the parser to enter the object or the array. As the JSON always starts with an object or an array, the outermost operator will not contain any parameters. However, note that inside of this outer operator, there can be further Process Array and Process Object operators which will have parameters, mirroring the JSON structure.

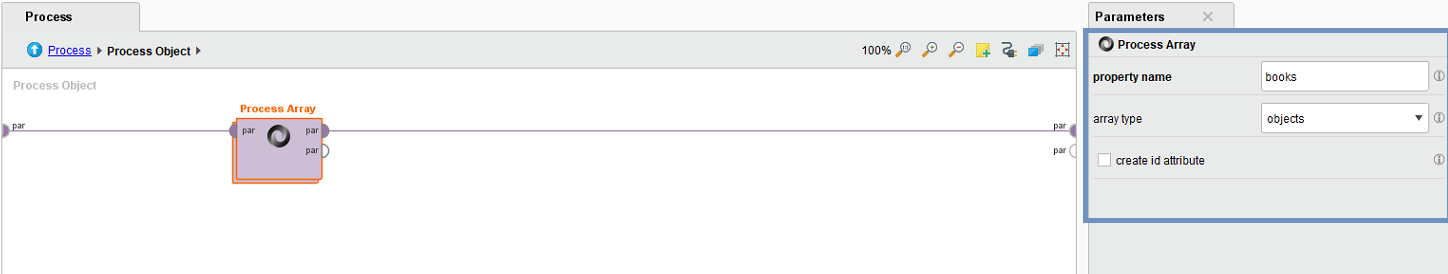

Let’s see how this looks for the inner Process Array operator:

Here, we enter the „books“ property and define it to be an array of objects. Whatever happens now inside the Process Array operator will react to the inner objects.

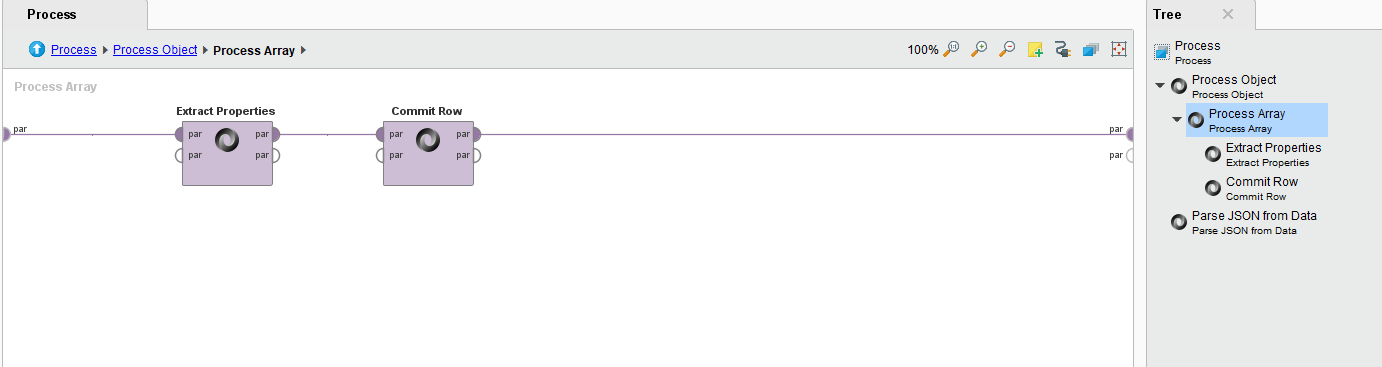

Taking a look at the inner process of Process Array, we see two operators: Extract Properties and Commit Row. Both define what is supposed to happen when the parser finds an object within the book array of a given JSON. In short, this process defines that the parser is supposed to extract some properties and store them as Attributes in the resulting example set and then finish a row in the resulting example set when the object is processed, meaning we can extract any information from properties of the current object that have scalar values like strings, numbers or booleans. Once we have done that, we can commit the row, so that each object is represented by a row in the resulting example set.

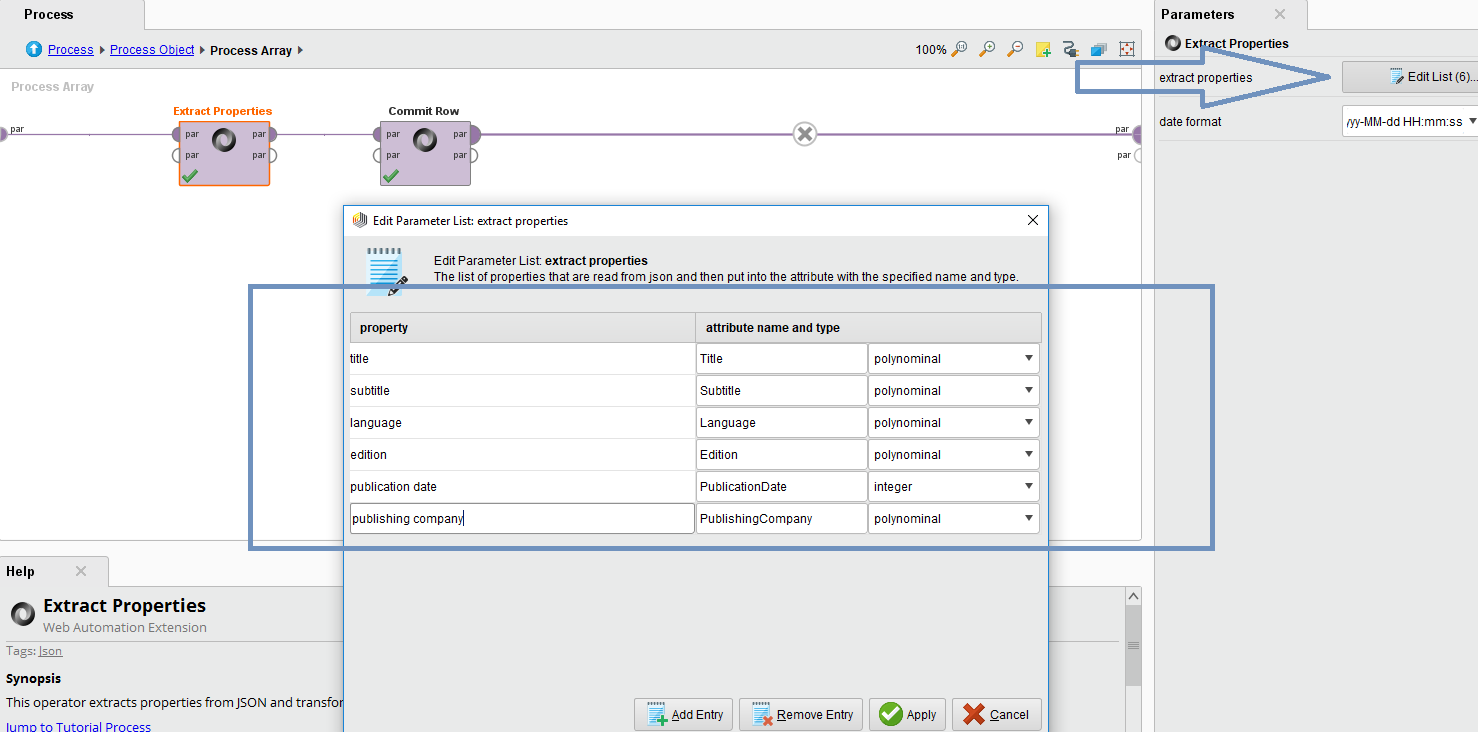

In this case, the objects in books are provided with titles, subtitles, and more detailed information such as language or publication date. To extract these properties, we configure the extract properties parameter in the Extract Properties operator: under “property”, we set the name of the property. In this case title, subtitle and so on. On the right under “Attribute name and type” we can freely assign a name of an attribute and its type. When the definition is used for parsing, the result will contain this attribute and the information will be stored in it.

Please note that the specification object needs to be passed to the outputs of the Process Object and Process Array operators. There is always one input and multiple outputs, but you at least have to provide one output. You can get the final specification on the outermost Process Object or Process Array operator.

Use a parser specification to parse JSON data

In the next paragraph, we will see how we can use the specification created in the last paragraph to actually parse a JSON. We will continue the scenario where we got the book data from a database table. All we need to do now is to add a Process JSON from Data operator, feed it with the data and the parser specification and tell it which Attribute contains the JSON data.

The parser will then take the input specification(s) and generate one data set per specification.

You can use the same specification with the various other parse operators. There’s one to parse JSON from files and file objects, coming in very handy when used in connection with the RapidMiner Scoring Agents or RapidMiner Server’s web services. You can also use JSON delivered by a macro, which finds its application mainly in the deployment as web service or in the web application building. And last but not least, the integration with the new operators to interact with RESTful web services allows to parse web service responses directly without overhead.

Contributor II

Contributor II

Answers

Lindon Ventures

Data Science Consulting from Certified RapidMiner Experts

You can contact us in the mail id somnath@datafotis.com