How to get performance on test data where the label has no values

Maven

in Help

Hi All,

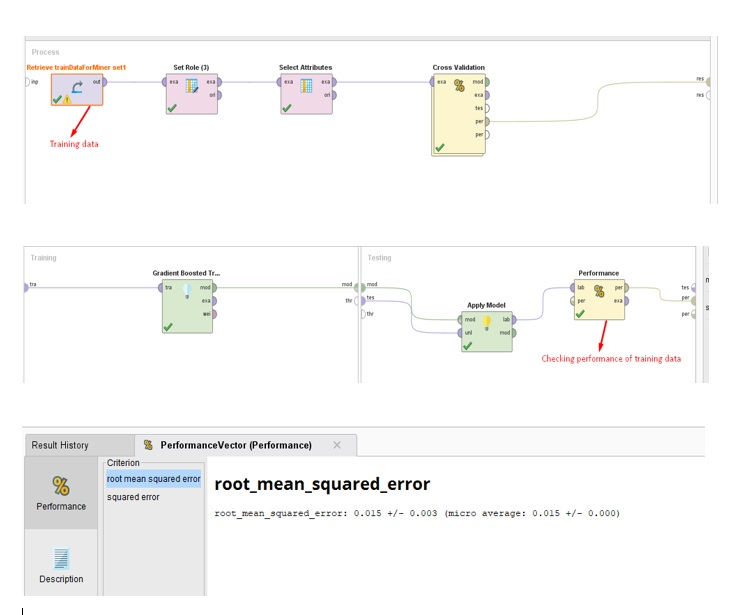

I measured how my model performs on the training data, and that worked.

Now I want to see how it performs on test data, so I added a second Apply Model and a second Performance operator, along with my test data, as shown below.

I got the errors below. That is probably because the "label" attribute is blank in my test data, since I wanted to see what values the model would predict. I do get the predictions, but can I also see how my model performs on a completely new data set with no values in the label? If yes, how?

squared_error: unknown

root_mean_squared_error: unknown

If I put Set Role between Apply Model and Performance, I can set the predicted attribute as my "label", but that is not right: the predicted column is not present in the original test data, so that does not work.
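To illustrate why the error measures come back as "unknown", here is a minimal Python sketch (not RapidMiner code). The `rmse` helper and all values are hypothetical and chosen only for illustration. The sketch assumes missing labels are represented as `None` and scores only the rows whose label is known.

```python
import math

def rmse(actual, predicted):
    """RMSE over rows with a known label; NaN when no row has a label."""
    pairs = [(a, p) for a, p in zip(actual, predicted) if a is not None]
    if not pairs:
        # Nothing to compare the predictions against, so the error is undefined.
        return math.nan
    return math.sqrt(sum((a - p) ** 2 for a, p in pairs) / len(pairs))

# Hypothetical values, not taken from the thread.
predictions = [0.12, 0.08, 0.15]
blank_labels = [None, None, None]   # test data with an empty label column
known_labels = [0.10, 0.09, 0.15]   # the same rows once actual values exist

print(rmse(blank_labels, predictions))   # undefined without labels
print(rmse(known_labels, predictions))   # a number once labels are present
```

With no actual values in the label column there is nothing to measure the predictions against, so an error can only be computed once the real outcomes are available.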

Best Answer

User111113

Member Posts: 24

Yes, the Nov 19 data was not in the training set. But I feel I have very limited options for which models I can run. I see only 3 models, and mostly 2 of them, GBT and random forest, that work with my data, because it has only 1 real/integer attribute, the response rate, and all the others are polynominal.

Answers

Varun

https://www.varunmandalapu.com/

Be Safe. Follow precautions and Maintain Social Distancing

Thank you for your response.

My next step was to run it without Performance and save the results to an Excel file. Then I ran that Excel file as input to the same model to see the error rate, and it came out as 0. Can you tell me why?

Please see the screenshot below.

The result set generated above came from the model itself, so feeding the same data back to the model would obviously show 0 deviation.
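The zero error can be reproduced with a small Python sketch (again, not RapidMiner code). `toy_model` is a hypothetical stand-in for any deterministic trained model, and the inputs are made up. The point is only that scoring a model against its own exported predictions compares the model with itself.

```python
import math

def rmse(actual, predicted):
    """Root mean squared error between two equally long lists."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

def toy_model(x):
    # Hypothetical deterministic model standing in for the trained one.
    return 0.05 + 0.01 * x

inputs = [1, 2, 3, 4]

# Step 1: export the model's predictions (the saved Excel file).
exported = [toy_model(x) for x in inputs]

# Step 2: use the exported predictions as the "label" and score the same model again.
rescored = [toy_model(x) for x in inputs]

print(rmse(exported, rescored))  # 0.0
```

A deterministic model reproduces its own output exactly, so this check says nothing about how well it predicts real outcomes.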

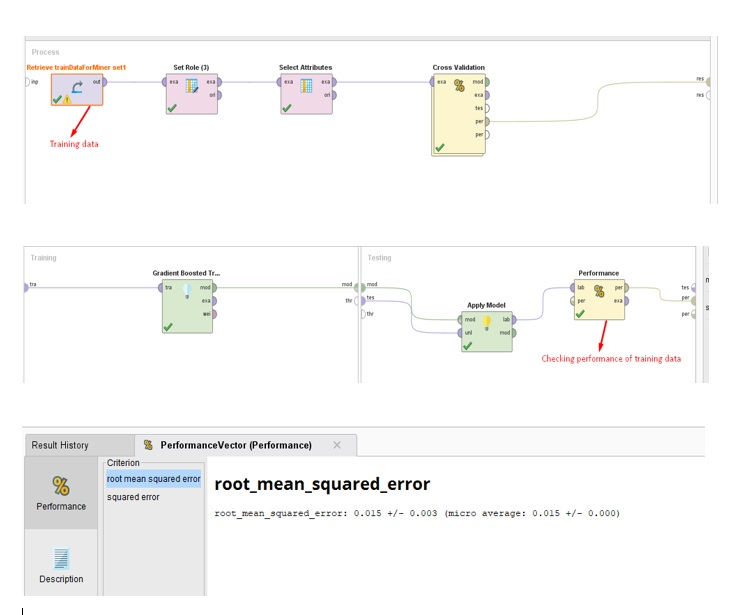

Now I used the original data instead. For example, I predicted the response rate for Nov 2019, and I already have the actual values for that month, so I fed those in as input to see how much the predictions deviate from the originals. I got a root mean squared error of 0.016, which isn't bad. What do you think?
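Whether an RMSE is good depends on the scale of the response rate, so one way to judge it is to compare against a naive baseline. The Python sketch below assumes a baseline that always predicts the mean of the training labels. All numbers are hypothetical and are not the data from this thread.

```python
import math

def rmse(actual, predicted):
    """Root mean squared error between two equally long lists."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

# Hypothetical values for illustration only.
train_rates = [0.10, 0.14, 0.09, 0.13]   # response rates seen during training
actual = [0.11, 0.15, 0.08]              # held-out actual response rates
predicted = [0.12, 0.13, 0.09]           # model predictions for the held-out rows

# Naive baseline: always predict the mean training response rate.
baseline_value = sum(train_rates) / len(train_rates)
baseline_rmse = rmse(actual, [baseline_value] * len(actual))
model_rmse = rmse(actual, predicted)

print(f"model RMSE:    {model_rmse:.4f}")
print(f"baseline RMSE: {baseline_rmse:.4f}")
print("model beats baseline:", model_rmse < baseline_rmse)
```

If the model's RMSE is clearly below the baseline's on data it never saw in training, that is some evidence the model has learned something useful. An RMSE close to the baseline would suggest it has not.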
