Limits on rows - Truncated data set - Missing - Non-missing data

Hello,

I am a newbie. I downloaded RapidMiner Free Studio (my understanding is that it comes with one month of Studio Enterprise) and signed up for RapidMiner Go on Saturday (April 3rd). I have watched all the Academy videos (in case you were going to send me there). I started playing around and ran into several problems.

Disclaimer: at the moment I am evaluating the head-to-head performance of RapidMiner, Weka and Orange. Weka and Orange are free; RM Go, at $10 a month, is comparable.

My understanding is that a file under 50 MB with fewer than 500 attributes should be good to go on RapidMiner Go.

So, I have a file of size <50MB, 11 attributes and 542 919 rows. No missing data in the dataset - not a single one.

I've run a classification on it, but unfortunately it got truncated down to 120 000 rows.

I have not gotten any notification in between.

I also did some predictive modeling and I get a lot of MISSING data (especially obvious when I predict on a testing set).

So far, all of this was in Go.

For the first month of Free RM Studio, I get Enterprise Studio, where there is no limit on anything (in theory). My computer has 16GB of RAM (in case somebody asks: not bad, not great either).

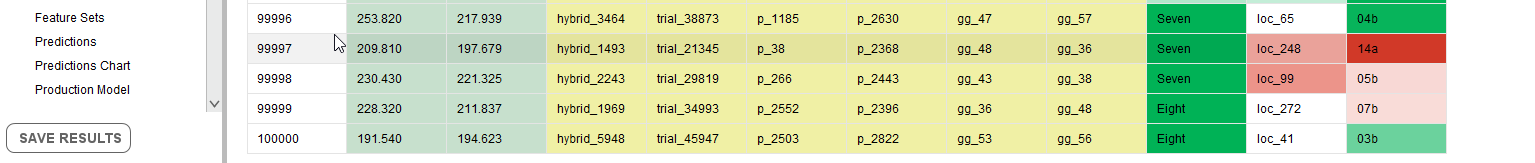

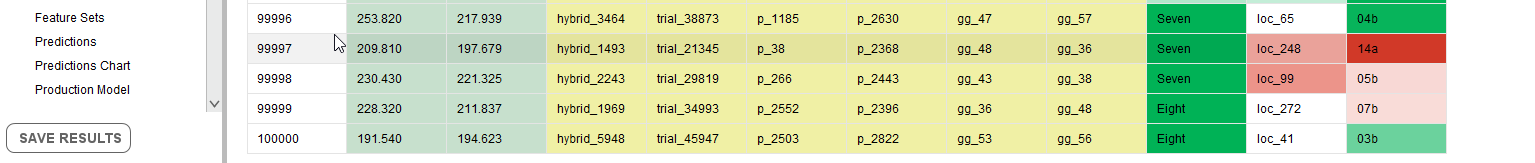

When I run the same modeling with Auto Model in Studio (I have a Free Educational License, which should come with a month of Enterprise Studio), I get even more truncated results: it all stops at 100 000 rows.

Even worse, there is not a single missing value in the dataset, but there are missing values in the predictions.

So, my questions are: is the data truncated in Go? Is the data truncated in Studio? Why are there missing values if there is not a single missing or NA cell in the dataset? How can I overcome this if I am to use RM in the future?

Thank you so much,

Radostina

Answers

Regards

I understand that ETLs are the reason for the assignment of the missing values, but I have no explanation for the truncation. I get the first 125 000 rows and the rest are gone.

Also, when I apply the model to a new dataset (a testing dataset) in Go, the prediction results I get have nothing to do with the input test set I submit. It is like a mix and match of columns and rows, with lots of missing values spread around. This is unfortunate, because I like the concept behind supporting and contradicting predictions; this alone is the best feature in RM from what I have seen so far.

I definitely cannot trust the RM results for the time being. I will spend two more days exploring (until the Go trial expires), but it seems I will not commit to the platform for now. There is better control in WEKA/Orange/Genie.
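One possible source of mixed-up columns and missing values at prediction time is a test file whose header does not line up with the training file (different names, casing, or order). Whether RapidMiner Go matches columns by name or by position is not established in this thread, so the following is only a minimal sketch of a check that can be run outside RM. The function names and the sample data are hypothetical.

```python
import csv
import io


def read_header(handle):
    """Return the column names from the first row of a CSV stream."""
    return next(csv.reader(handle))


def compare_columns(train_header, test_header):
    """Report columns that are absent, unexpected, or reordered in the test set."""
    train_set, test_set = set(train_header), set(test_header)
    return {
        "missing_in_test": sorted(train_set - test_set),
        "extra_in_test": sorted(test_set - train_set),
        "same_order": list(train_header) == list(test_header),
    }


if __name__ == "__main__":
    # Hypothetical in-memory files; replace with open("train.csv", newline="") etc.
    train = io.StringIO("age,income,label\n34,52000,yes\n")
    test = io.StringIO("income,age,Label\n48000,29,no\n")
    report = compare_columns(read_header(train), read_header(test))
    print(report)
```

If the report shows any missing, extra, or reordered columns, aligning the test file with the training file before uploading it is a cheap thing to try first.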

Apologies for the stupid question, but how do I share the model here?

This is the process - see attached. The process should equal the model.

Best,

Radostina

I heard you loud and clear in the previous comment, when you suggested the breakpoints, and I am doing that now. Hopefully I will figure out where the data is being mishandled.

I still do not understand the truncation in Go and the mix and match of results, but for the time being I will play around. The more I understand the process/model, the better I will understand the modeling.

Thanks for your input.

best,

Radostina

It's a pleasure; we are here to help each other. Auto Model is a great tool, but remember that you are the analyst and the one who has the business knowledge. It's important to understand the dataset preprocessing and validate it.

Good luck.
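For validating the data outside the tool, a small script can confirm the row count and the number of missing cells in the source file and in an exported result, which makes any truncation visible as a plain difference in row counts. This is a minimal sketch using only the Python standard library. The function name, the sample data, and the set of strings treated as "missing" are assumptions to adjust for the actual files.

```python
import csv
import io

# Assumption: these strings mark a missing cell; adjust to the file's convention.
MISSING_TOKENS = {"", "?", "NA", "NaN", "null"}


def profile_csv(handle):
    """Count data rows and missing cells per column in a CSV stream."""
    reader = csv.DictReader(handle)
    missing = {name: 0 for name in (reader.fieldnames or [])}
    rows = 0
    for row in reader:
        rows += 1
        for name in missing:
            value = row.get(name)
            # Short rows yield None for the absent cells; count those as missing too.
            if value is None or value.strip() in MISSING_TOKENS:
                missing[name] += 1
    return rows, missing


if __name__ == "__main__":
    # Hypothetical in-memory files; replace with open("data.csv", newline="") etc.
    source = io.StringIO("id,score\n1,0.4\n2,0.9\n3,0.1\n")
    exported = io.StringIO("id,score,prediction\n1,0.4,yes\n2,0.9,?\n")
    src_rows, src_missing = profile_csv(source)
    out_rows, out_missing = profile_csv(exported)
    print(f"source rows: {src_rows}, exported rows: {out_rows}")
    print(f"rows lost: {src_rows - out_rows}")
    print(f"missing cells in export: {out_missing}")
```

Running the same profile on the file before upload and on the downloaded predictions shows whether rows were dropped and in which columns the missing values appear.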