Ignore Deep Learning Errors!

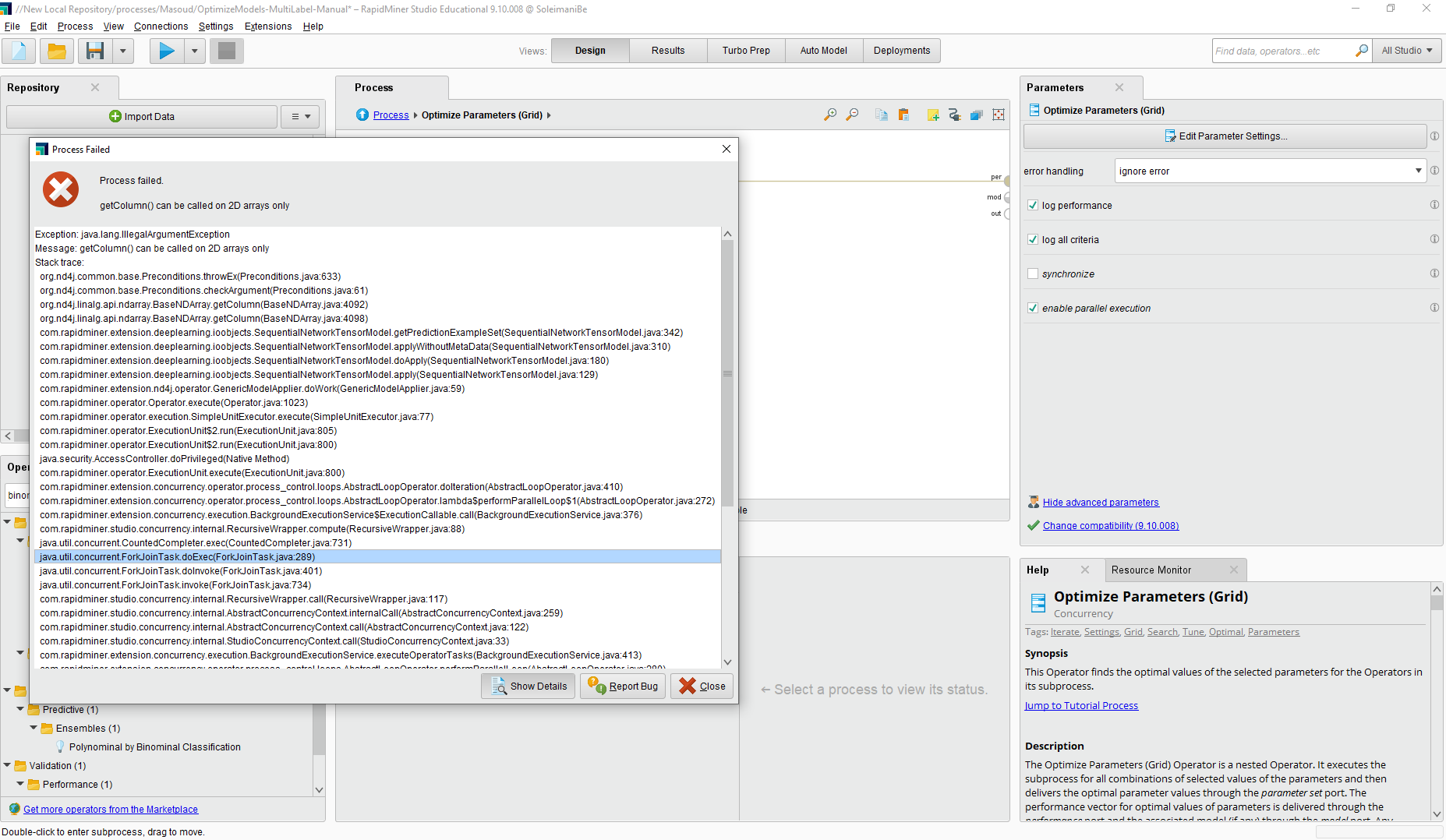

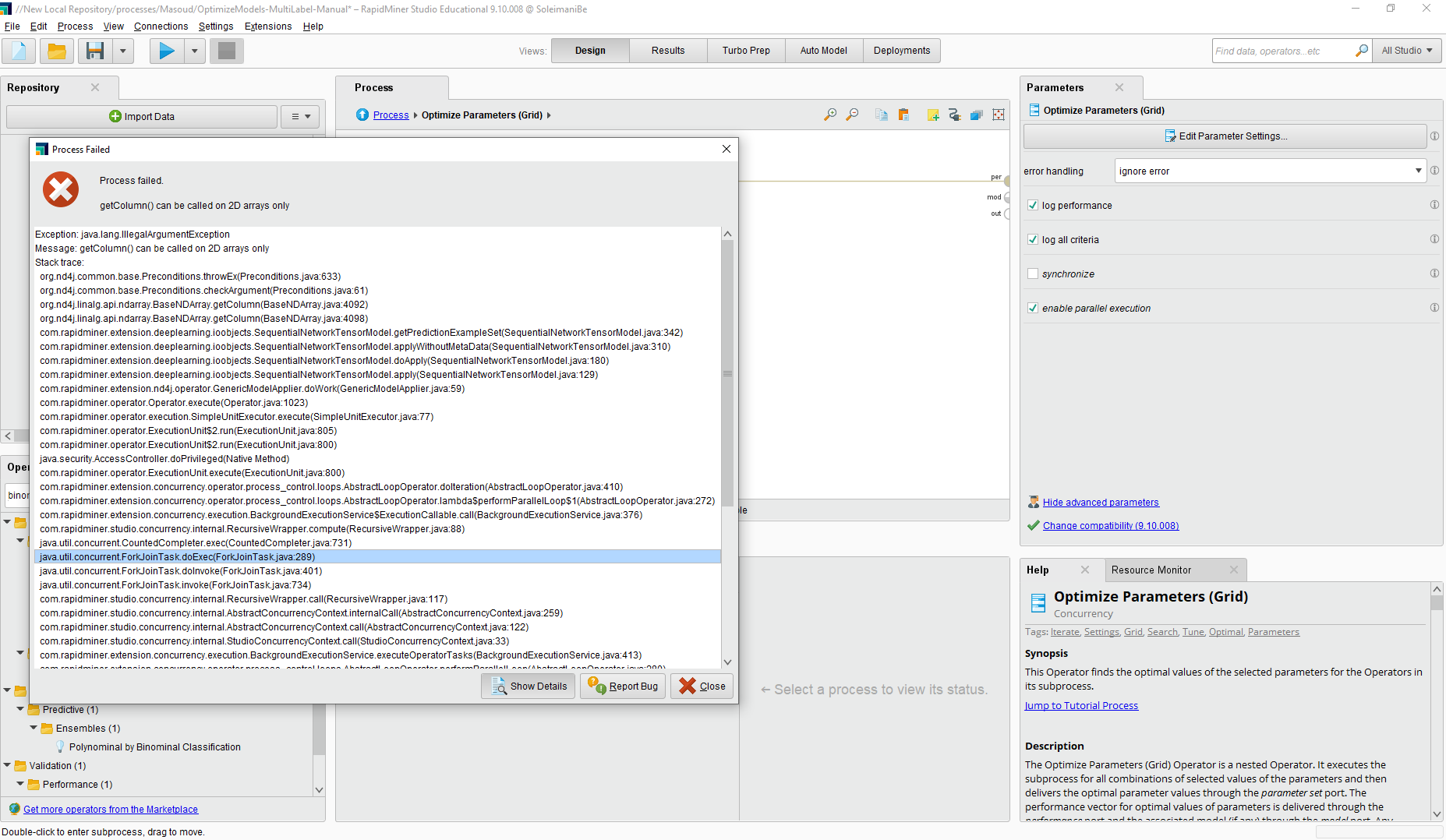

Unfortunately, the Deep Learning module cannot handle its exceptions gracefully: a single error makes the entire process fail. As a result, I cannot use it inside optimization operators like Grid Search, because one configuration error stops the whole process!

Besides, its error messages are unclear, and I often can't understand how to solve the problem. Usually there is no documentation or similar case to refer to, because there are so many possible architectures and layer configurations in deep learning.

Contributor II

Answers

David

Thanks a lot for your attention.

I had written this answer once already, but my browser closed (because of low memory while RapidMiner was running).

I usually use Grid Optimization (GO) with a Cross Validation operator (CVO) inside it, and inside the CVO I place a machine learning algorithm such as Decision Tree (DT) or Deep Learning (DL). Then I configure GO to find the best hyperparameters, especially for boolean and polynominal (categorical) parameters such as the criterion parameter of the Decision Tree.

When I do this with the DT, I know that some values (options) are invalid, such as least_square for the DT's criterion, but I can configure GO to ignore these errors so they don't make the entire process fail. With DL, however, a misconfiguration makes the whole process fail instead of throwing an error that GO can catch. GO can't ignore DL errors, and I think this is due to how errors are handled in the DL code. For example, the Try operator from the Operator Toolbox extension can't handle DL errors either.
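The behaviour I expect from GO can be sketched outside RapidMiner. The Python below is only an illustration, not RapidMiner code: `grid_search_ignore_errors` and `fake_cross_validation` are hypothetical names, and the scores are made up. Each parameter combination is evaluated inside a try/except, so an invalid combination is logged as a failure and the search moves on to the next one.

```python
import itertools
from typing import Any, Callable, Dict, List, Optional, Tuple

Params = Dict[str, Any]


def grid_search_ignore_errors(
    evaluate: Callable[..., float],
    grid: Dict[str, List[Any]],
) -> Tuple[Optional[Params], float, List[Tuple[Params, str]]]:
    """Evaluate every combination in `grid`; skip (but log) combinations that raise."""
    names = list(grid)
    best_params: Optional[Params] = None
    best_score = float("-inf")
    failures: List[Tuple[Params, str]] = []
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        try:
            score = evaluate(**params)
        except Exception as exc:  # invalid configuration: remember it, keep searching
            failures.append((params, repr(exc)))
            continue
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, failures


def fake_cross_validation(criterion: str, depth: int) -> float:
    """Hypothetical stand-in for 'train inside cross-validation, return accuracy'."""
    if criterion == "least_square":
        raise ValueError("least_square is not valid for a nominal label")
    base = {"gain_ratio": 0.80, "gini_index": 0.78}[criterion]
    return base + 0.01 * depth


best, score, failed = grid_search_ignore_errors(
    fake_cross_validation,
    {"criterion": ["gain_ratio", "least_square", "gini_index"], "depth": [2, 4]},
)
print(best, round(score, 2), len(failed))
```

Catching an exception like this only helps when the failing step actually raises one inside the same process. If a component brings down the whole process instead, the usual workaround is to run each trial in a separate process; that seems to be what is happening with DL here, but I haven't confirmed it.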

As for why I have misconfigurations in the first place, that is another story; if anybody can solve the following problems, that would be great:

I am modeling a multi-class classification problem on time series data, and I have two problems with DL + TensorFlow:

1) DL + TensorFlow cannot be used inside the Multi-Label operator (MLO), because MLO doesn't accept TensorFlow data as input. If I convert my TensorFlow data to an example set and build the DL model inside the MLO, the output model has different I/O than MLO expects, so MLO can't accept it! So I had to implement multi-label handling myself using Loop Attributes. Please consider fixing this problem in MLO (consider it my second bug #bug_report).

2) When I configure DL for binary classification, it seems that TensorFlow or DL converts my input label (true/false) to numeric (0/1), and DL then treats the task as regression instead of classification! Since there is no specific documentation or tutorial for these special cases, I would prefer to configure GO to first find the configurations that can run at all and then, in the next step, find the parameters with the best performance. But, as I said before, DL doesn't throw a catchable exception when it is misconfigured; it fails the entire process. I also need to see the TensorFlow data (at least part of it), but there is no visualization for these operators (consider it my third bug #bug_report).
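The manual workaround from problem 1), training one model per label by looping over the label attributes, is what is usually called binary relevance. A minimal Python sketch of the idea, outside RapidMiner: `train_centroid` is a hypothetical nearest-centroid classifier standing in for the DL model, the other function names are also hypothetical, and the toy data is invented for illustration.

```python
from typing import Callable, Dict, List

Vector = List[float]
Classifier = Callable[[Vector], bool]


def _mean(rows: List[Vector]) -> Vector:
    return [sum(col) / len(rows) for col in zip(*rows)]


def _sq_dist(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train_centroid(X: List[Vector], y: List[bool]) -> Classifier:
    """Hypothetical nearest-centroid binary classifier (placeholder for the DL model)."""
    pos = [x for x, t in zip(X, y) if t]
    neg = [x for x, t in zip(X, y) if not t]
    if not pos or not neg:
        raise ValueError("each label needs both true and false examples")
    c_pos, c_neg = _mean(pos), _mean(neg)
    return lambda x: _sq_dist(x, c_pos) < _sq_dist(x, c_neg)


def train_binary_relevance(
    X: List[Vector], labels: Dict[str, List[bool]]
) -> Dict[str, Classifier]:
    # One independent binary model per label column, like looping over the label attributes.
    return {name: train_centroid(X, y) for name, y in labels.items()}


def predict_binary_relevance(models: Dict[str, Classifier], x: Vector) -> Dict[str, bool]:
    # Ask every per-label model separately and collect the answers.
    return {name: model(x) for name, model in models.items()}


X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
labels = {"A": [False, False, True, True], "B": [False, True, False, True]}
models = train_binary_relevance(X, labels)
prediction = predict_binary_relevance(models, [0.9, 0.2])
print(prediction)
```

Because each label gets its own model, GO could in principle tune each label's model separately; the trade-off of binary relevance is that correlations between labels are ignored.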

I have also attached my processes. Each of them has a training and a testing dataset like the DL tutorial example set (in //Samples/Deep Learning/data/ICU Subsample Training), but my dataset has multiple binary labels:

1- OptimizeModels-MultiLabel: uses GO and MLO, which are affected by the second bug.

2- MultiLable-Manual: has no bug and works for me, but I need to optimize it. It also has a logical problem: my binary labels are converted to numerical labels, so I have to convert the final predictions back to binary labels using the Numerical to Binominal operator. I can't tell which operator converts my label to numerical; without a visualization of the TensorFlow output, I can't tell whether the problem comes from the "Timeseries to Tensorflow" operator or the DL operator (the third bug).

3- OptimizeModels-MultiLabel-Manual: contains GO plus my manual multi-label implementation, and it fails when I configure it to run with various parameters (the first bug).
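The Numerical to Binominal step in MultiLable-Manual amounts to thresholding the numeric (0/1-style) predictions. A minimal Python sketch of that idea, assuming the model outputs scores where larger means "true"; the function name `numeric_to_binominal` and the 0.5 threshold are my own assumptions, not the operator's actual parameters.

```python
from typing import List, Sequence, Tuple


def numeric_to_binominal(
    scores: Sequence[float],
    threshold: float = 0.5,
    classes: Tuple[str, str] = ("false", "true"),
) -> List[str]:
    """Map regression-style outputs (around 0..1) back to two class labels."""
    negative, positive = classes
    # Scores at or above the (assumed) threshold become the positive class.
    return [positive if s >= threshold else negative for s in scores]


predicted = numeric_to_binominal([0.12, 0.5, 0.93, -0.04])
print(predicted)
```

Thresholding only repairs the output. If the label reaches the learner as a numeric attribute, the learner may well treat the task as regression, so checking the label's type just before the DL operator is probably the first thing to look at.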

I would really like to help you with bug triage, but I don't know where to find the related code, and I am not familiar with your architecture, data models, and logic. Besides, many of these problems are in extensions. Still, I will try to read the RapidMiner source code repository on GitHub and help in the future.

Sincerely

David